October 4, 2023

Unmasking AI: The Complexities of Bias and Representation for South Asian Women

By Anmol Irfan

Following the popularity of Lensa AI - the generative AI platform that created portraits for users - in Pakistan last year, AI and its impact on everyday users suddenly became a common topic. Although the app has been around since 2018, it is only with the recent boom in AI startups, and with AI becoming more accessible through platforms like ChatGPT, that people have begun to use these technologies every day - and to have fun with them. But while most people pop onto these apps for a bit of fun, whether that's getting AI to make them look like their favourite characters or asking ChatGPT funny questions, experts in tech and AI have started to raise concerns about the ethics of AI's growing popularity.

Lensa was one app that came under fire, both for its use of artists' techniques and for its racial bias against users of colour. As Mansoor Rahimat Khan, the founder of Beatoven.ai, points out, these aren't necessarily priorities for tech companies when they start out. "Most of these companies are coming out of the West, and equity is their last priority," Khan says.

When it comes to our part of the world in particular, things tend to get more complicated, especially when you add a gendered lens. Tech companies, especially those working with AI, rarely consider the implications of their technology in the Global South, much less how their policies and content affect vulnerable communities here. For women in South Asia, privacy breaches can be dangerous: traditions around 'honour' and the policing of women's online and offline presence pose a very real threat. So when questions arose about how the app was storing and using data, women started to worry. Beyond that, what's equally worrying is the imagery these apps show us when we use them, and as the use of AI increases, so do the voices of its critics. Women of colour are shown in exoticised and sexualised ways, and even when they aren't, the images clearly reproduce stereotypes of how South Asian women are perceived in the West. Akanksha Singh, who was drawn to the trend out of curiosity and by the exciting results her friends got, decided not to regenerate her images for this article because of the privacy issues she has learned about since she first used it. Singh pointed out that racial bias was evident in her images.

“What bothered me was that I was being whitewashed in all "contemporary" images. If I included a prompt that suggested I was in an office setting or similar, my skin tone was a few shades lighter. On the other hand, if I included a prompt to wear a sari, I was a deeper shade than my reference images. If I included a background prompt (I recall inputting "in an Indian hill station") -- something I did on Dream Studio, I think -- I got images with a stereotypically crumbly structure in the background. (I was expecting the lush greens of somewhere like Darjeeling rather than a structure.) This is not to say these don't exist -- they do -- but they're not the only representation of what an Indian hill station looks like,” Singh shares. She’s not alone in her experiences. In fact, stereotypical perceptions of South Asian women are being exacerbated quickly as AI images gain a foothold online.

One of the most prominent pages on X when you search "AI Pakistan" is dedicated solely to highly sexualised AI-generated pictures of women. One post shows four images of Pakistani women dressed in traditional jewellery and clothes, except that their clothing and bodies are portrayed in an overly sexualised way, enhancing their bodies while drawing attention to their exoticised outfits. Images like this play into Western tropes of South Asian women as exotic, sexualised beings. The other common set of images, by contrast, reduces South Asian women to rural settings or seemingly "backward" lifestyles.

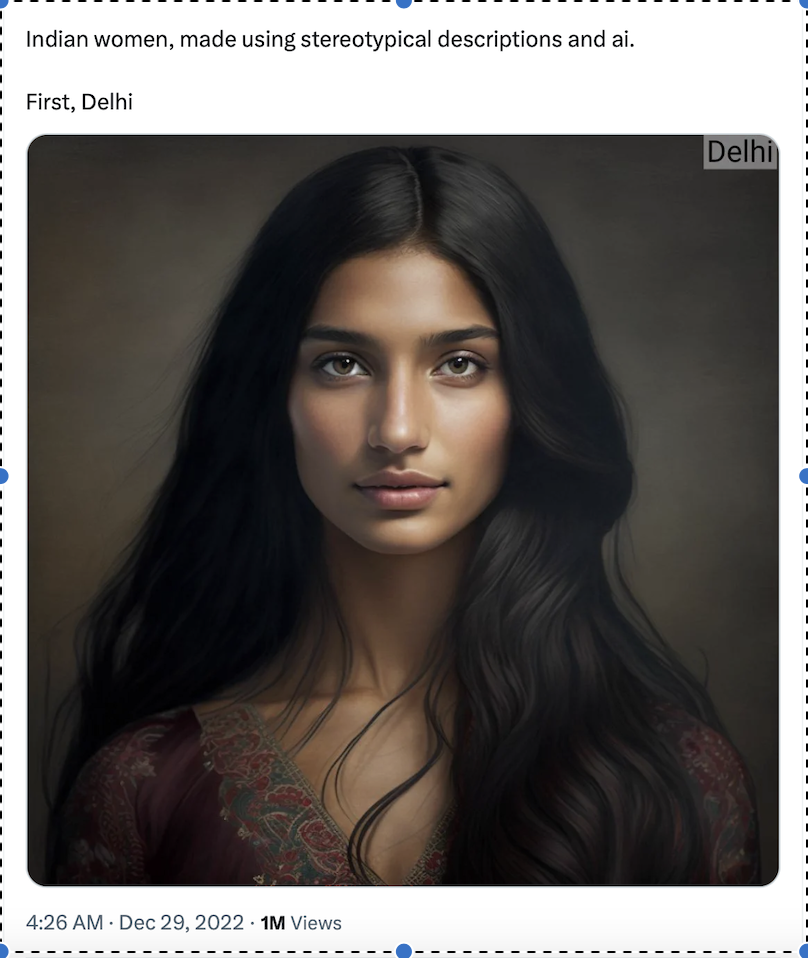

This post is one example of the way in which South Asian women are perceived. Most obvious is the focus on heavy jewellery and elaborate clothing, which are rarely seen in everyday life. The deeper issue is that none of these countries has a homogeneous population, so representing each with a single woman can also reinforce the stereotypes through which Western audiences see these women.

A more detailed thread showing women from different regions of India, however, illustrates how limited the previous query itself was.

Still, even here, the women all have an unreal feel, perhaps because their South Asian features seem overdone and don't match realistic standards. The noses look too perfect and the skin too smooth to belong to a woman I would meet on the street.

This is another image that came up when I entered the prompt "Pakistani woman" into an AI image generator. The first thing one notices is the desert-like background, which plays into tropes of countries like Pakistan as backward, underdeveloped places where most people lack access to technology or any modern advancements.

This second image comes from the same prompt on a different website, and here too the emphasis on jewellery and the enhancement of facial features are evident. Also noticeable is the lightening and smoothing of skin tones in most AI-generated pictures.

Journalist Sajeer Shaikh also had a similar experience when generating her own images.

“I didn't like the images. I understand that they're a fantastical depiction of myself, but it seemed like the app zeroed in on insecurities I did not have, taking liberties with 'enhancements,'” she shared. She was expecting otherworldly, warrior-esque images she could have fun with, but she ended up with insecurities she hadn't expected.

Of course, the AI only appears to be making these decisions; in reality, it is drawing on existing datasets. That means more attention needs to be paid to the data this technology relies on in the first place.

Khan, who works mainly with audio because his company uses AI to generate music, says these biases aren't limited to visuals but extend to text and audio as well. “We need to ask ourselves why ChatGPT is in English, why customer support has an American accent,” he says. A UN study has also found that giving AI voice assistants female voices reinforces gender biases.

Much of this stems from a lack of alternative data. South Asian women are so underrepresented because they are only seen within certain narrow realms, which can make many South Asian women feel lesser when they try to do something outside those arenas.

“When I saw my images, I was overcome by sorrow because I felt a whitewashed extension of myself was depicted. It raised questions about why the software seemed to struggle to depict me as I am, with embellishments that didn't pertain to my skin or eye colour or even weight in some cases. You start questioning things about yourself, wondering, "What if I looked like this," and if that "what if" is a better version of yourself. It's not, of course, but AI projecting these depictions adds to the age-old issue of misrepresentation and perpetuating the idea of one kind of beautiful,” Shaikh says.

Many prominent Pakistani women, such as RJ Anoushey Ashraf and influencer Karachista, also hopped on the trend. Ashraf's observations about how she could use the app to enhance her East Asian features, after finding out that her genes have East Asian traces, were both exciting and a little scary. In a region where women are already objectified, what does it mean when certain features can be changed at will? And how does that affect the way South Asian women see themselves, and the way others see them?

Mahnoor Qureshi, a freelance journalist, turned to AI tools and editors as the next step in editing her pictures. She used Snapchat and its tools frequently, explaining that being bullied since childhood, whether for looking "bulky" or over comments about her nose, led her to these editing tools to make herself feel better. She has been using AI Photo Generator for five to six months, and has noticed that in about 5-6% of her pictures it overdid her features, making her skin look doll-like and giving her unnaturally perfect teeth. Even though this happened in only a small percentage of her pictures, Qureshi worries that “we are moving towards an unreal world, and we won't accept ourselves and move towards surgeries and botox.”

“I believe the women are mostly insecure due to their skin texture. They want clear and even-toned skin. These images, generated by AI, consist of flawless, clear skin. Also, I have noticed that South Asian women are brown. But they really want to look one or two tones lighter than their original skin tone. The AI-generated images are 2 or 3 tones lighter than the original ones,” Qureshi adds.

In a digital world where the data hardly reflects the diversity of South Asians, much less South Asian women, there's a long way to go in challenging stereotypical data. Khan points out that these issues existed long before generative AI became common, and that its everyday use has in fact helped researchers and creators identify them as problems. “If you’re conscious of different regions, the outcome will be more impactful. Currently, there’s no representation of South Asian people or women inside these models - but I’d also like to say this is a very early phase for these technologies, where researchers have also identified these problems - so over a period of time, they do want to make sure these models are as unbiased as possible,” he says.

It's equally important to note that capturing the full diversity contained in the term 'South Asian women' within a single article, or through one person's perspective, is almost impossible. So while the term is used broadly to signal the common problems women face in the region, it can't begin to do justice to the experiences of vulnerable women across class, religion and background who have lived less privileged lives. Even when South Asian women are represented, those shown usually belong to a privileged group. Globally, this often means upper-caste Hindu women, though it depends on the country. In Pakistan, it often means upper-class, elite Muslim women, usually Punjabi.

“I feel as though "South Asian" encompasses such a diverse group of people of varied races and ethnicities -- it wouldn’t be fair of me to speak on behalf of everyone. However, as someone from a more privileged and more represented (both globally and domestically) South Asian background, if AI image generation is presenting hiccoughs for me, then what is it doing with or to less represented South Asians?” Singh says, acknowledging this as she reflects on her AI images and how they made her feel.

She also rightly points out that while these images may be fun for many users, the implications can be dangerous.

“Limited representation is never ideal. To draw on my point above, I will say that if we're using these same algorithms for things like law enforcement down the line, I worry we're not taking this seriously. This tech isn't all fluff, after all. If you saw that NYT article from a week or so ago -- where a different Black woman (who happened to be pregnant) was falsely accused of a crime due to faulty AI-powered facial recognition -- you know that we're not thinking of the Black Mirror potential this technology has.”

Published by: Digital Rights Foundation in Digital 50.50, Feminist e-magazine
