October 4, 2023 - Comments Off on The Machine is rigged – a deep dive into AI-powered screening systems and the biases they perpetuate

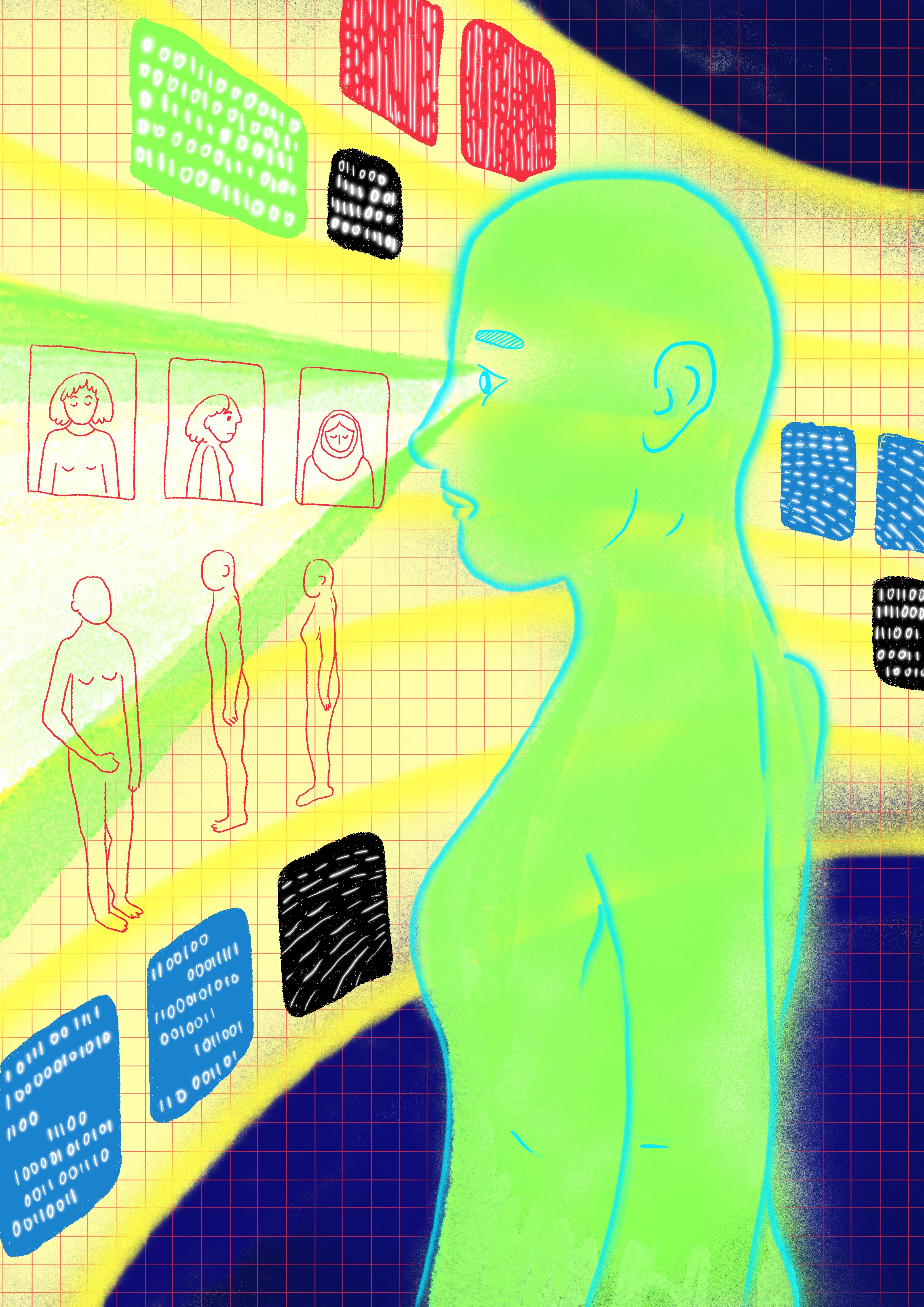

The Machine is rigged – a deep dive into AI-powered screening systems and the biases they perpetuate

By Nabeera Hussain

We live in a world largely dictated by algorithms. An algorithm predetermines what content we watch on Instagram; it determines which route we take on our daily work commute, and now algorithms decide whether we are eligible for a job or more likely to commit fraud.

We live in a world largely dictated by algorithms. An algorithm predetermines what content we watch on Instagram; it determines which route we take on our daily work commute, and now algorithms decide whether we are eligible for a job or more likely to commit fraud.

A recent joint investigation between WIRED and the media non-profit Lighthouse Reports revealed that a machine learning algorithm deployed by the city of Rotterdam in the Netherlands in 2017 to detect benefit fraud for citizens on state welfare discriminated based on gender and ethnicity.

The algorithm worked by assigning a welfare-baed 'risk score' to people. The score measured the likelihood of the individual committing welfare fraud based not only on factors like age, gender, and fluency in the Dutch language but also on more invasive data such as marital status, love life, hobbies and interests, appearance, financial background as well as emotional and behavioural responses.

By playing around with the input attributes of the risk-score algorithm, one could intuitively see that certain attributes contributed to a higher risk score than others. The investigative report analysed two fictional individuals: Sara, a single mother of two children, and Yusef, an unemployed immigrant with limited Dutch language proficiency. In both cases, being a female, immigrant, having children, having weak Dutch language skills, struggling to find a job, and coming out of a relationship as a single parent would drastically increase your risk score and decrease your chances of getting welfare compared to an average male. According to the report:

"If our typical Rotterdam male were female, she'd move up 4,542 spots closer to a possible investigation. If she had two children, she would move another 2,134 spaces up the list. If she were single but coming out of a long-term relationship, that's another 3,267 spots. Struggling to pay bills? That's another 1,959 places closer to potential investigation.

If he were a migrant who, like Yusef, spoke Arabic and not Dutch, he would move up 3,396 spots on the risk list. If he, too, lives in a majority migrant neighbourhood in an apartment with roommates, he would move 5,245 spots closer to potential investigation. If his caseworker is sceptical, he will find a job in Rotterdam; he’d move up another 3,290 spots.” 8

In Austria, an ASM algorithm, regarded as a "prime example for discrimination”10 by various researchers, was used to predict job seekers' chances to reintegrate into the Austrian job market. The algorithm was shown to attribute negative coefficients to applicants that were labelled as "female," "over the age of 50", or had some form of "health impairment." On the contrary, data points corresponding to the attributes "male, " under 30 years old", or "able-bodied" were assigned neutral coefficients that couldn't be categorised as positive or negative.

The coefficients were directly proportional to the "reintegration value," a marker determining the job seeker's likelihood of returning to the job market. The reintegration value was then used to categorize the applicants into different categories. The individuals with a low integration value were classified into group C, a special group that separated them from the job centre system and placed them into a support group for their psychosocial well-being. Individuals with a mediocre reintegration value were assigned to group B, which still made them ineligible to apply for a job directly but did entitle them to the most expensive job support.

The issue with using AI to automate and screen individuals lies mainly in the fact that the data used to train these models is sourced from the real world, where discrimination and bias are inherent. In our context, we can define ”biases" as a “discrepancy between what the epistemic foundation of a data-based algorithmic system is supposed to represent and what it does represent."2

Bias can be voluntary or involuntary and can be introduced at any stage of the machine-learning process. It could be in the form of mislabelled training data or data missing altogether. AI researcher Moritz Hardt argues that "dominant groups tend to be favored by automated decision-making processes because more data is available and therefore receive fairer, more representative, and accurate decisions/ predictions, than minority groups for which data sets are limited.”5

An automated hiring system built for a company in the US that is biased towards hiring white males would, with high probability, be predominantly trained on data from white and male individuals. If you use the system to screen individuals from other races and gender identities, the system would automatically reject them. This is because while the algorithm produces results with confidence, it is only doing so in accordance with the nature of the data supplied to it by human agents. It will not and can not question the relevance or morality of the data fed into it. Historical training data can only use people’s knowledge from the past to make decisions about people in the present.

The algorithms also don't factor in intersectionality, i.e., the idea that "differences in risk/merit, while acknowledged, are frequently due to systemic structural disadvantages such as racism, sexism, inter-generational poverty, the school-to-prison pipeline, mass incarceration, and the prison-industrial complex.”3 Algorithms, instead, assume the infra-marginality principle, which legitimises disparities in risk and merit between certain social groups by assuming that society is a level playing field.

To an algorithm like the one employed by Rotterdam or the ASM algorithm in Austria, the entire human experience is reduced to a singular numeric value or boolean variable. These values and variables are then fed to algorithmic "black boxes" riddled with human error and involuntary bias, which outputs what the person's destiny would be. While in the real world, a person who does not conform to predefined societal roles has the chance to challenge and persuade actual humans in the hiring team to look beyond their preconceived biases with an algorithm that is just a collection of binary 1s and 0s that becomes impossible.

To an algorithm trained on data reflecting discriminatory screening practices, the only thing that matters is the attributes used to predict an outcome; thus, the prospects of every individual are predetermined based on factors entirely beyond their control. The ASM algorithm need not look beyond the fact the candidate it is analysing is either a woman, a 50-year-old or has a disability. If any of the labels apply to you by virtue of being human, you are automatically a reject.

Proponents of such AI systems and algorithms argue that decision machines depict reality as it is. They are neutral algorithms that mimic real-world decision-making, and they should be appreciated for the efficiency with which they can do so. It is a well-known fact that human beings trust the decisions made by machines because of the facade of neutrality and objectivity that algorithms uphold. 9

However, the arguments made in favour of automated AI systems repeatedly ignore the fact that the pushback by policymakers and AI regulation bodies is not concerned with the algorithm's neutrality, accuracy, or efficiency - these are already established, but rather how, in a world that's already so unequal and divided, the same neutrality, accuracy, and efficiency would only go on to scale these inequalities up times ten and solidify the unequal power structures and social hierarchies in place. These systems, owing to the lack of transparency surrounding their use and inner workings, when used to determine the fates of real people, not only threaten the human experience but disregard several human rights.

One such right violated is the right to "informational self-determination." An individual - unaware that their gender, racial or sexual identity is used to classify them into castes and categories- cannot influence the classification methodology. They are stripped of their autonomy and free will to carve out their own destinies and choose their desired career paths since the algorithms have already decided their fate for them using unknown ways even to the people who made those algorithms.

In 2010, Eli Pariser introduced the term "filter bubble" to describe the phenomenon of tech consumers being stuck in their ideological echo chambers due to personalisation algorithms that only reinforce a person's beliefs rather than present them with alternate viewpoints. Just like recommendation algorithms in advertising "choose" your next product for you based on invasive tracking data that has been collected against your will through cookies and web trackers - data which is then used to compare buying histories of people with similar traits, demographics, and personality types as you - hiring algorithms, if not regulated, will get to "choose" your job for you too.

Researchers have proposed several interventions to make AI algorithms abide by an intersectional framework. The problem with prevailing bias mitigation methods is that they focus on discrimination along a single axis, i.e., gender, race, age, or income level. They treat each attribute as an independent entity rather than acknowledging how each attribute correlates intricately.

One proposed criterion to counter these gaps in bias mitigation is differential fairness, which "measures the fairness cost" of a machine learning algorithm. The cost is determined by the difference in the probabilities of the outcomes, i.e., "…regardless of the combination of protected attributes, the probabilities of the outcomes will be similar, as measured by the ratios versus other possible values of those variables. For example, the probability of being given a loan would be similar regardless of a protected group's intersecting combination of gender, race, and nationality…”. 7 There has also been research conducted on using pre-trained classifiers explicitly developed for low representation, minority classes in datasets which demonstrates that such an approach could improve the accuracy of results for both the intersectionality and non-intersectionality classes in a dataset. 6

Bodies like the EC have proposed several guidelines in their AI White Paper to make AI systems bias-free by focusing on the biases introduced through training datasets. "Requirements to take reasonable measures aimed at ensuring that such subsequent use of AI systems does not lead to outcomes entailing prohibited discrimination. These requirements could entail obligations to use data sets that are sufficiently representative, especially to ensure that all relevant dimensions of gender [...]are appropriately reflected in those data sets”.4

Thus, the first step towards fair and responsible AI screening systems is introducing and implementing proper legislation to mitigate intersectionality bias right at the beginning of the screening stage by correcting the data itself. It must be kept in mind that fairness and transparency must go beyond the algorithms themselves and also apply to the human data handlers and decision-makers because, ultimately, it's the way the data is used as opposed to the way it's produced that produces any tangible impact on civil society. Increasing female representation in AI research and development would help introduce diverse narratives, weaken generalisations, and create more accurate algorithms that produce more equitable outcomes.

Recently, a job applicant screening tool called Workday AI, which several Fortune 500 companies use, was sued in the US by a black applicant over 50 with a history of anxiety and depression. 1 The applicant claimed that the platform discriminates against black, disabled, and older job seekers. He was always rejected despite applying for "some 80 to 100 jobs" at different companies that he believed used Workday software. The lawsuit states that the Workday screening tools enable customers to make discriminatory and subjective judgments when reviewing and evaluating job applicants. If someone does not pass these screenings, they will not progress in hiring.

Some MNCs that use this platform also have a prominent presence in Pakistan, a country that doesn't even have a dedicated data protection law. One can only imagine the implications of the widespread use of such systems in a country with one of the worst human rights records. A country whose corporate sectors are riddled with allegations of nepotism, bias, workplace abuse, and discrimination, and a country that has a long way to go in achieving gender parity.

Citations

-

AIAAIC - Workday AI Job Screening Tool. www.aiaaic.org/aiaaic-repository/ai-and-algorithmic-incidents-and-controversies/workday-ai-job-screening-tool.

-

Barocas, Solon et al.: Fairness and machine learning. Limitations and Opportunities, fairmlbook.org 2019 (work in progress), introduction

-

Crenshaw, Kimberle. "Demarginalizing the Intersection of Race and Sex: A Black Feminist Critique of Antidiscrimination Doctrine, Feminist Theory, and Antiracist Politics [1989]." Feminist Legal Theory, 2018, pp. 57–80, https://doi.org/10.4324/9780429500480-5.

-

European Commission: White Paper On Artificial Intelligence. COM(2020) 65 final, 2020, p.19.

-

Hardt, Moritz: How big data is unfair. In: Medium 2014, https://medium.com/@mrtz/how-big-data-is-unfair-9aa544d739de (February 6, 2021).

-

Howard, Ayanna M. et al. "Addressing bias in machine learning algorithms: A pilot study on emotion recognition for intelligent systems." 2017 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO) (2017): 1-7.

-

Islam, Rashidul, et al. "Differential Fairness: An Intersectional Framework for Fair AI." Entropy, vol. 25, no. 4, Apr. 2023, p. 660. Crossref, https://doi.org/10.3390/e25040660.

-

Mehrotra, Dhruv, et al. "Inside the Suspicion Machine." WIRED, 6 Mar. 2023, www.wired.com/story/welfare-state-algorithms.

-

University of Georgia. "People may trust computers more than humans: New research shows that people are more likely to rely on algorithms." ScienceDaily. ScienceDaily, 13 April 2021. www.sciencedaily.com/releases/2021/04/210413114040.htm.

-

Wimmer, Barbara. “Der AMS-Algorithmus Ist Ein „Paradebeispiel Für Diskriminierung“.” Futurezone, 22 Oct. 2018, futurezone.at/netzpolitik/der-ams-algorithmus-ist-ein-paradebeispiel-fuer-diskriminierung/400147421.

Published by: Digital Rights Foundation in Digital 50.50, Feminist e-magazine

Comments are closed.