November 13, 2023

Election Illusions: Deepfakes & Disinformation in Pakistan

Ayesha Khalid

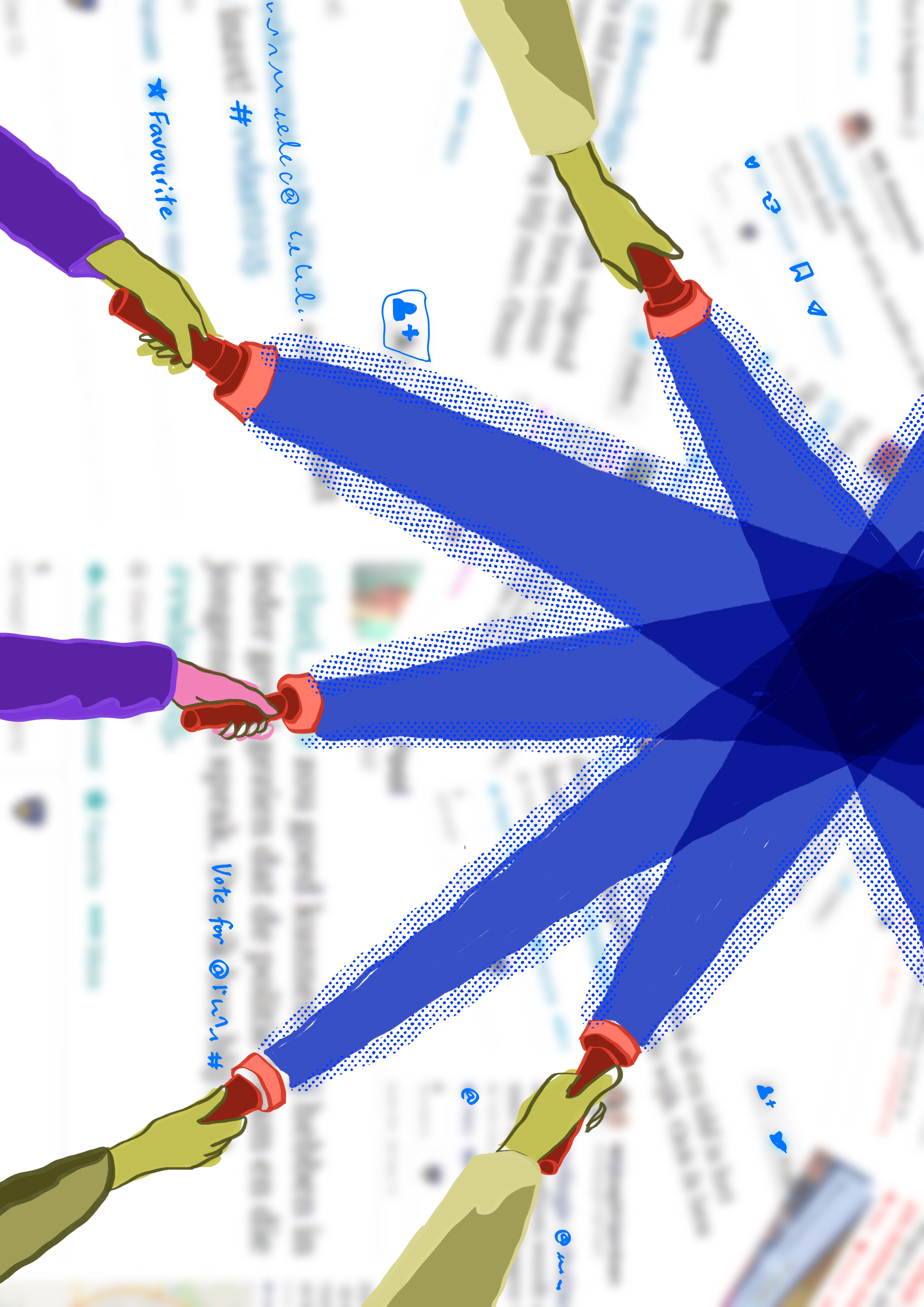

Imagine: Elections are just around the corner. You are scrolling through your social media feed, and a video of your favourite politician passionately addressing a large crowd captures your attention.

You watch intently. The person in the video appears nothing short of authentic. But here’s the catch: What if I told you the figure full of fervour in that video is not flesh and blood but a mere illusion conjured through generative artificial intelligence (AI) technology?

While the disclosure may initially strike you as whimsically surreal, there is no denying the fact that it has become a reality, surpassing even the most far-fetched imaginations. In an age where artificial intelligence has taken the world by storm, and despite reservations expressed by writers, artists, performers, and educators worldwide and the ongoing heated debates about the threats and challenges posed by such advanced tech, generative AI has become one of the most captivating and fascinating wonders in the world of technology.

AI products continue to astonish the world as they change the face of the global creative media industry. These advancements encompass everything from the generation of lifelike images of revered celebrities to the production of AI-crafted news anchors. And now, in a development that breaches the boundaries of political theatre, we are witnessing the emergence of AI-generated clones of political leaders. The potential implications of this technology are extensive and captivate those who engage with its boundless possibilities.

When virtuality permeates reality

Let’s begin with “Chat2024.com”, an initiative launched by Delphi, a digital cloning platform which offers users a unique opportunity to establish one-on-one connections with a range of famous personalities. These prominent faces may range from political figures, creators, and artists to renowned business magnates. Delphi excels in the art of replicating the complete personality and cognitive abilities of a real-life persona, custom-tailoring this duplicate to offer a personalised and interactive experience for its audience.

In a fascinating convergence of technology and human interaction, Delphi effectively renders these digital clones accessible to its audience. Its service, Chat2024.com, stands as a digital forum where audiences have the distinct privilege of engaging in dialogue with these synthetic duplicates, gaining insights, perspectives, and even responses to queries of vital significance. For instance, users can not only pose pressing questions to their favourite politicians but can also watch these clones engage in dynamic political debates.

AI in political campaigns around the world

Before delving into the potential implications of AI in the upcoming elections in Pakistan, it is important to look at a few examples highlighting the potential of deepfakes around the world, including in some of the foremost economies. In the United States, for instance, the Florida governor launched a confrontational campaign against former president Donald Trump, using “deepfake” images that depict Mr. Trump embracing Dr. Anthony Fauci. Forensic experts subsequently revealed that the AI-generated media, despite appearing remarkably realistic at first glance, was misleading and aimed at undermining Trump’s reputation. Similarly, another video, seemingly showing the current U.S. President, Joe Biden, making transphobic remarks, was shared on social media, where it spread like wildfire. Experts, however, debunked this video as a deepfake, too.

In Turkey, in the run-up to the general elections, President Erdogan showcased a manipulated video, which insinuated that his major contender, Kilicdaroglu, was linked to a militant organisation. Erdogan’s use of this manipulated footage highlights how AI-generated content can be harnessed for political purposes, even when its authenticity is subject to question. These incidents collectively illustrate the growing role of AI deepfakes in political campaigns, making it essential for policymakers in a country like Pakistan to address the ethical and regulatory challenges surrounding this technology, considering the upcoming elections.

But are we there yet?

AI and the upcoming Pakistani elections

In the context of Pakistan, a state where political divisions have taken on an increasingly fervent and polarised character — particularly following the ousting of the former prime minister Imran Khan and the return of the self-exiled disqualified premier Nawaz Sharif — the potential role of AI looms as an additional threat to the electoral processes. For a moment, just consider the prospect of engaging in a conversation, posing challenging questions, and witnessing heated debates with these AI-generated replicas of political leaders. It may sound bizarre, but considering past events, it is evident that the supporters of different political parties would wholeheartedly embrace such technological innovations, as who wouldn’t relish the spectacle of their favourite leader skillfully navigating the tumultuous waters of the electoral battle? While Pakistan hasn't traditionally indulged in the practice of one-on-one debates between rival electoral candidates, it is imperative to acknowledge the possibility of such a paradigm-shifting development in the country’s intricate political tapestry. The fusion of AI and politics is poised to redefine the way political discourse is conducted, and Pakistan, with its robust but fragile political environment, may be no exception to this sweeping change.

In the critical May 9 episode, Pakistan Tehreek-e-Insaf (PTI) Chief Imran Khan shared a video titled “Sinf-e-Ahan” (translated as “women of steel”), aimed at honouring and emphasising the role of women in what Khan described as a struggle for “real freedom”. This came almost a year after his much-debated removal from the Prime Minister’s Office. The incident sheds light on how political leaders, including influential and widely followed figures such as Imran Khan himself, can inadvertently or intentionally embrace AI to shape their narratives, often without fully comprehending the consequences of spreading technology-facilitated disinformation. The video in question, portraying a woman confronting the police, was generated using AI software. International media outlets have conducted investigations confirming that the media was fabricated.

This situation serves as a stark example of how politicians can potentially misuse AI in the electoral process. While the intention behind using AI-generated content may vary from creating emotional impact to building a particular narrative, the line between genuine information and AI-generated content can become increasingly blurred. In the context of elections, this could lead to the spread of false or manipulated information, which, in turn, could impact the choices made by voters.

Another pressing concern arises when considering the implications of OpenAI's tools for politics in the context of the upcoming general elections in Pakistan. OpenAI's software, ChatGPT, which was launched in November 2022 and changed the face of conventional academia and content marketing, is primarily trained on human feedback, which results in it inheriting human-like qualities, including the general political biases of its users. While this attribute might be useful for content generation, it becomes problematic when we consider its application in a court of law or, in this case, an election process. The impact of AI on democracy and democratic norms is complex and multifaceted. There are concerns that AI could be used to manipulate public opinion and undermine the democratic process, as well as exacerbate existing inequalities and reinforce biases.

Threats to marginalised communities and persecuted groups

It is important to mention that Pakistan drafted its first National Artificial Intelligence Policy in May 2023, marking a significant step in adopting AI’s potential for growth. However, the policy lacks a holistic national outlook, with concerns raised about limited input and consultation and the exclusion of crucial stakeholders across the board. The existing bureaucratic aversion to digitisation poses a substantial hurdle to the policy's effective implementation. Also, the policy raises concerns about Internet access and connectivity restrictions that could disrupt market confidence and the emerging AI ecosystem. The draft policy seems to emphasise idealistic goals over practical considerations, suggesting a lack of substantive analysis. It is clear that the impact of AI on democracy will depend on how it is developed and deployed. It is, therefore, essential that policymakers, researchers, and technologists work together to ensure that AI is used in a responsible and ethical manner that respects and upholds democratic values and principles.

Another crucial aspect to consider in the context of AI's influence on electoral campaigns is the effect of AI-generated harmful material on vulnerable and persecuted groups, especially the transgender community and women. In a country where conservatives and supporters of exclusionary agendas actively engage in coordinated disinformation campaigns against women in politics and gender-diverse communities, the implications of generative AI for electoral campaigns raise a fresh set of complex challenges.

The rise of online gender-based violence (OGBV) in the country further compounds these concerns. As AI advances and enables the generation of increasingly convincing deepfakes and manipulated content, the risk of harmful narratives targeting marginalised groups necessitates robust vigilance on online platforms. In a patriarchal society like Pakistan, where gender disparities and discrimination have long gripped the social fabric, the use of generative AI in shaping electoral narratives can perpetuate harmful stereotypes, intensify disinformation, and exacerbate online harassment and violence against vulnerable groups.

Shehzadi Rai, the first elected transgender member of the Karachi Municipal Council, has raised concerns about the potential threats posed by AI-generated content, particularly in online gender-based violence (OGBV) against transgender individuals. She highlights, “I have raised critical concerns about the threats posed by AI-generated content against my community. I’ve experienced it personally, facing AI-fueled trolling on platforms like Twitter, where trolls use AI to fabricate extensive threads with malicious intent, specifically targeting individuals from marginalised groups like ours. Recently, in another unfortunate incident, I received a doctored image of me on WhatsApp, showing me inappropriately without my clothes. The image was fake, and I assume it must have been created using AI technology. For me, the mere thought of such images going viral is alarming, as it could have severe consequences for my political career and personal safety.”

She adds, “I often find myself in a state of uncertainty, questioning whether these threats constitute an organised campaign to implicate me in spreading LGBTQI agenda or are merely part of the regular daily threats I encounter. However, one thing is clear – the potential harm caused by this unchecked AI technology, particularly in a conservative society like Pakistan, could seriously jeopardise my political career and personal life”.

Rai shares that her hopes for government intervention are minimal, emphasising that tech giants operating AI technology have a fundamental responsibility to govern its harmful use. Her experience underscores the urgent need for AI regulation and ethical considerations to protect vulnerable and marginalised communities from the implications of AI-generated harmful content. It also shows how AI can be both a tool for political manipulation and a vector for reinforcing societal inequalities.

AI regulation in Pakistan

The situation leads us to question the stance of state authorities in addressing the possible consequences and risks associated with AI. What proactive steps can they take to address these issues, rather than remaining passive while the rapidly evolving world addresses security and safety concerns linked to AI? “It does get frustrating at times to see other countries moving towards the regulation of AI technology while we have yet to move on from draconian cyber legislation aimed at surveilling citizens and coercing tech firms into handing over personal user data to the government,” Usman Shahid, editor of Digital Rights Monitor (DRM), says. “You look at countries like France, Australia, Japan, and the UK, and they have all started regulation of AI technology, reviewing security assessments from tech firms and investigating potential breaches. AI has already become a mainstream dialogue in the tech sphere in these markets.”

In Pakistan, understanding online disinformation requires local authorities to stay abreast of the latest trends in AI-generated material. Monitoring disinformation can be particularly resource-intensive due to the vast volume of online information and social media content shared in local languages. Left unchecked, the spread of false information manipulates public opinion, erodes trust in institutions, and sows discord within society. These divisive narratives can further exacerbate existing societal divisions, creating an environment of polarisation and mistrust. Therefore, the digital domain's vulnerability necessitates a prompt and effective response from the government and relevant bodies in Pakistan.

Hamaad Salik, a PhD in Information Systems Security, currently associated as a programs manager with the U.S. Department of Labor, says the upcoming elections in Pakistan face a severe challenge from AI-generated deepfakes and disinformation.

“AI introduces a constellation of significant cybersecurity threats with far-reaching implications for political parties, both as potential victims and wielders of these threats in the context of elections,” says Salik. “The manipulation of AI-driven tactics and the resultant disruption of election results necessitate rigorous mitigation strategies.”

The use of AI, particularly in the form of deepfakes and cyberattacks, poses significant threats to political processes and campaigns. Deepfakes may tarnish the reputation of political figures, while cyberattacks can lead to the theft of crucial information and disrupt the operations of rival political parties. AI-generated material can also distort public opinion and affect voter turnout, and AI can facilitate hacking techniques that are more sophisticated and harder to detect.

According to Salik, AI-related challenges in Pakistan are further exacerbated by inadequate digital infrastructure, limited internet access and unreliable connectivity. The lack of resources and awareness at the governmental level also complicates efforts to overcome these challenges through tech-based solutions.

Beginning with the basics

For a fair and secure electoral process, it is essential for authorities to secure adequate resources that enhance their capacity for monitoring disinformation and for building public awareness of artificial intelligence. It is an established fact that disinformation thrives on fundamental falsehoods that evolve and circulate continuously. To tackle this menace, the Ministry of Information Technology and Telecommunication (MoITT) can collaborate with officials from the Election Commission of Pakistan (ECP) to enhance public awareness and trust in the security of the electoral system.

The Commission must also start collaborating with nonprofits working on tech-related issues in Pakistan, including disinformation and hate speech. This can significantly counter AI-driven misinformation around elections and help prevent manipulation attempts that can mislead the public. Journalists can also leverage resource packs and training modules developed by these organisations to better understand the challenges arising from AI and how to tackle them. Additionally, ministries should scrutinise their official websites for any personal or organisational information hackers might exploit to create personalised phishing emails.

In an individual capacity, Salik says, tools such as Deepfake Lab and FaceForensics++ and initiatives like the DeepFake Detection Challenge can help people develop and enhance basic digital investigation skills. Fact-checking resources like Snopes, PolitiFact, and FactCheck.org can also play an essential role in countering disinformation.

Published by: Digital Rights Foundation in Digital 50.50, Feminist e-magazine
