Digital Rights Foundation participated in the 3rd Summit for Democracy in Seoul to bring Pakistan's perspective from the recent elections. The primary focus was on fostering democracy for future generations. Around 300 delegates, including government officials and representatives from international organizations, academia, and civil society, gathered in Korea to discuss policy enhancements and strategic pathways to shape a better future for the coming generations.

In S4D3’s session 'Upholding Information Integrity Online to Reinforce Democracy and Human Rights' in Seoul, DRF's Senior Project Manager, Hyra Basit, spoke about how Pakistan's 2024 General Elections exposed the dark side of online discourse. DRF’s social media monitoring and intensive research during the election period were also highlighted.

Policy Initiatives:

International Women’s Day 2024

In celebration of International Women's Day 2024, DRF’s team shared heartfelt stories about the remarkable women who have inspired them.

In celebration of International Women’s Day, Privacy International sparked a meaningful dialogue that resonated deeply with us. They highlighted the remarkable women who are leading the charge in safeguarding privacy rights and fostering a more just world.

As part of this conversation, they asked the DRF team to share our reflections on the significance of privacy for women. It was an opportunity for us to explore and celebrate the unique perspectives and contributions of women in this vital field. Our comments are below:

Maya Ki Kahani | 16 Days of Activism

DRF launched an animated video telling the story of Maya. Maya is a trans woman who works in a department store to make a living. Maya is constantly harassed at work because customers take her photos and videos without her consent. Maya's images are then used to mock her online and spread around the internet. Why is Maya being ridiculed online for simply doing her job? Is Maya being attacked online because of her identity? Does the law protect Maya from this type of abuse?

You can watch the entire video here: https://www.youtube.com/watch?v=_i3VlCwgeq4

In the video, we aimed to educate the audience about important sections of the Prevention of Electronic Crimes Act (PECA) 2016, including Section 24, which covers taking pictures and videos of a natural person without their consent. The video also covered Section 20 of PECA, which concerns the dignity of a natural person and the sharing of content on social media that contains false information and violates a person's privacy.

DRF’s Cyber Harassment Helpline Guide

Have you or someone you know experienced any of the following?

- Your personal or private picture was uploaded online without your consent

- Your face was edited onto an inappropriate image

- Your information, such as your picture, phone number, or home address, was shared online to harass you

- A fake profile was created using your identity

- Your Facebook, Instagram, or WhatsApp account was hacked

If your answer is yes, and you're in need of assistance, please contact the Cyber Harassment Helpline at 0800-39393, and find out how we can help you.

DRF’s WhatsApp Disinformation Tipline

During election days, memes, videos, posts, and messages circulate widely on social media. Mithu is conducting research and he needs your help. If you come across any content spreading false or misleading information, especially regarding female or transgender politicians, please share it with Mithu via WhatsApp.

To send a message, click on the WhatsApp button on our Instagram and Facebook profiles or go to this link: https://wa.me/923013249539

Alternatively, you can directly send a WhatsApp message to this number:

0301 3249539

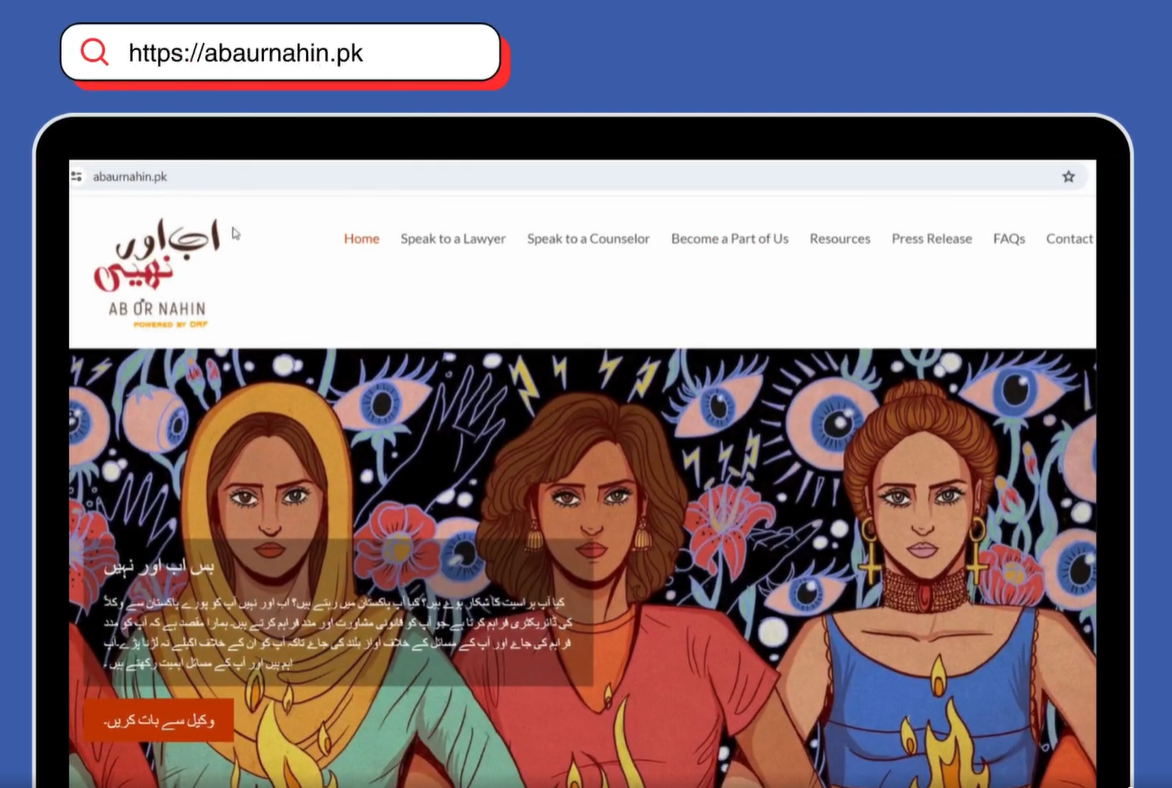

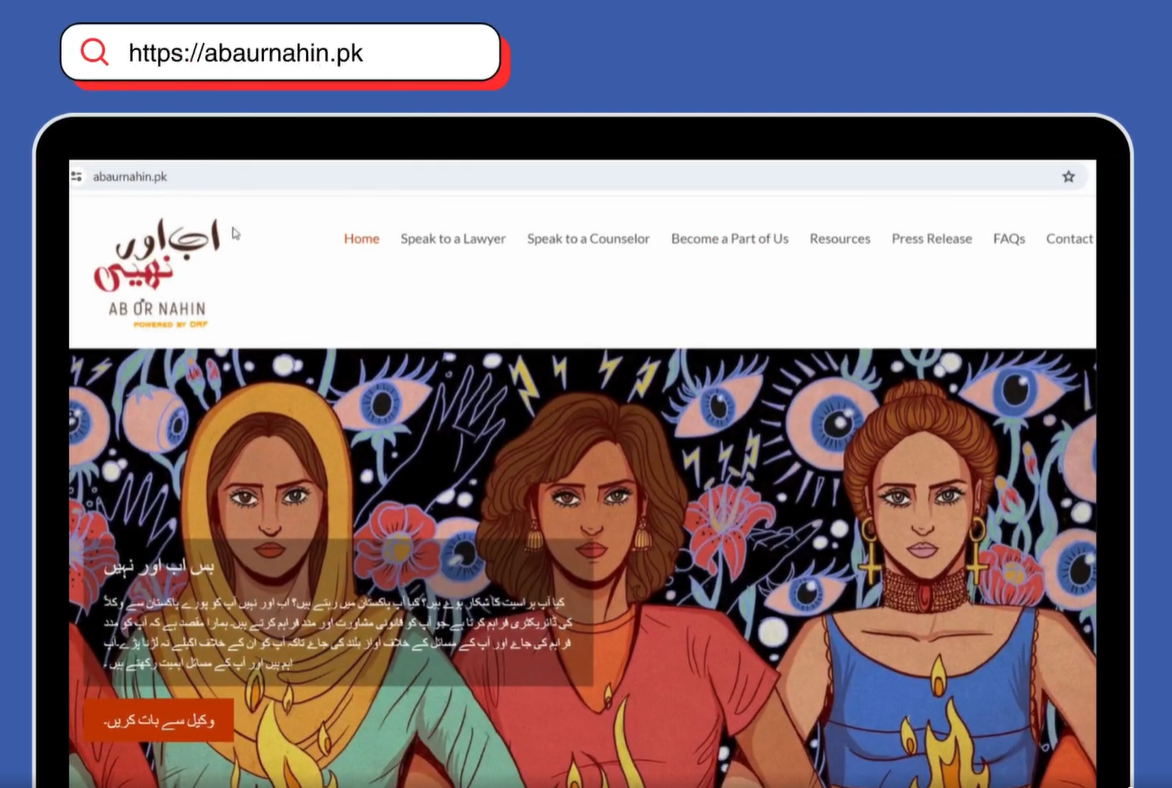

How to use ‘Ab Aur Nahin’

We launched a tutorial on how to use ‘Ab Aur Nahin’, DRF’s confidential legal and counseling support service specifically designed for survivors of harassment. You can easily access legal counsel and support tailored to your area through this user-friendly platform.

Click, connect, and take charge of your rights today. You are not alone, and you do not need to fight alone!

You can access the website here: https://abaurnahin.pk/

Press Coverage:

Nighat Dad on X shutdown on Geo News

DRF’s Executive Director Nighat Dad spoke with Geo News about the X shutdown in Pakistan. Nighat emphasized the importance of transparency in these actions, in accordance with international standards. She added that our local laws should address online disinformation and defamation without jeopardizing free speech. She went on to say that we need to work on improving existing laws as well as addressing the misuse of Generative AI right away.

Watch the entire interview here: https://web.facebook.com/watch/?v=302457409528246

A session with Suno FM Radio 89.4-96 (Aao Bat Krain)

Anmol Sajjad delivered a virtual session on online harassment on 6th March 2024 on Suno FM Radio 89.4-96 with Bushra Iqbal. The session focused on online harassment in the digital era, including its legal aspects, its common forms, and its impact on psychological and social life. The prevention of online harassment and digital security were also discussed during the session.

Watch the entire interview here: https://www.facebook.com/share/v/WQgDhT53gFvENRsG/?mibextid=qi2Omg

Nighat Dad speaks about the Resolution to Ban Social Media | Bol News

DRF's Executive Director, Nighat Dad, spoke with Bol TV about the resolution in the Senate to ban social media in Pakistan. She expressed her frustration with this way of thinking and explained how such actions damage Pakistan's image on the international stage. She went on to say that a blanket ban on social media, or even internet shutdowns, will not stop online disinformation; instead, we must identify the root of the problem and address it structurally, as well as reform the societal thinking that allows people to cause harm through online platforms.

Watch the entire interview here: https://www.youtube.com/watch?v=G2lmFfcTDgA

DRF in Press

Events:

Synthetic Elections: are we prepared for generative AI in 2024?

Organized by Demos and the UCL Digital Speech Lab, the event provided valuable perspectives on safeguarding democratic integrity amidst technological advancements. Nighat Dad shared her insights on navigating Generative AI in election cycles. The discussion centered around the impact of Generative AI, which has rapidly become a global concern. Policymakers, industry leaders, and the public convened to address the challenges posed by its exponential growth. Notable examples, such as AI-generated political audio and chatbot misinformation during elections, highlighted the urgency of the issue. She cautioned against overreacting to AI developments, emphasizing the need for balanced responses.

Watch the entire discussion here: https://www.youtube.com/watch?v=inaiJ6SJD08

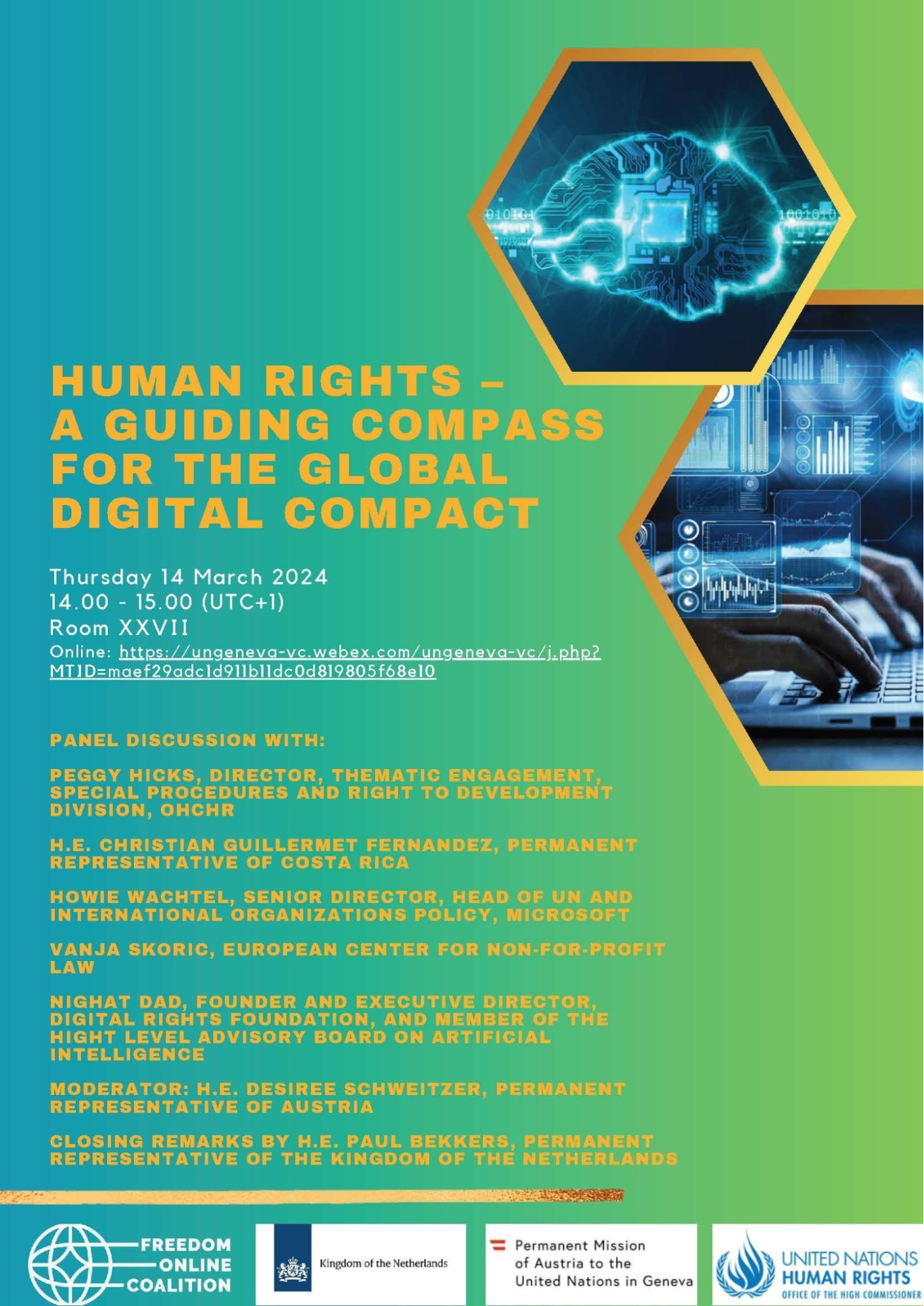

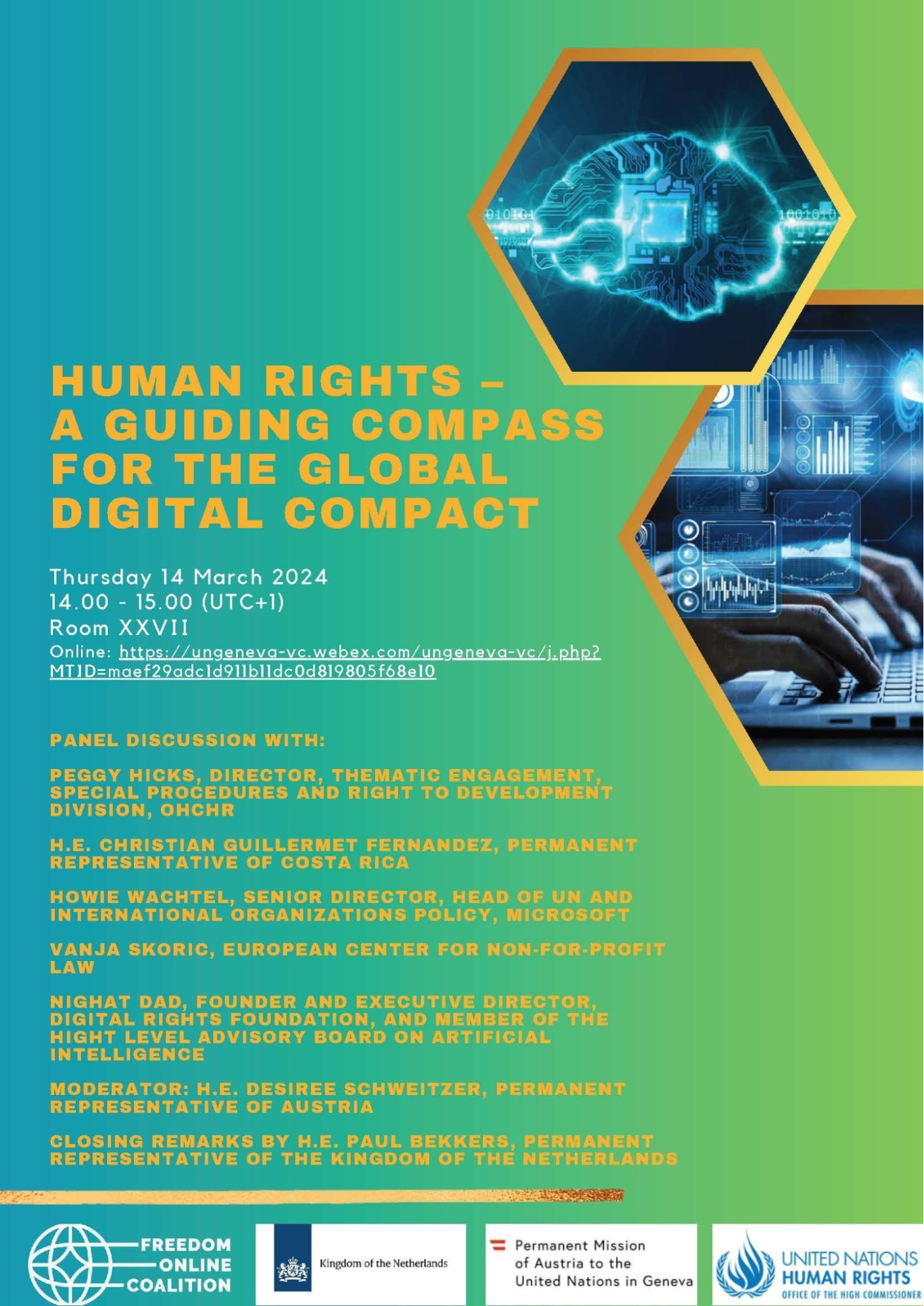

Nighat Dad on a panel at HRC55 of UNHRC

At #HRC55 of the United Nations Human Rights Council, Nighat Dad spoke on the panel ‘A Guiding Compass for the Global Digital Compact’, highlighting how human rights should be addressed in the framework of the Global Digital Compact. The Global Digital Compact is an initiative proposed in United Nations Secretary-General António Guterres's report, Our Common Agenda.

Read more here: https://www.un.org/techenvoy/

South and Southeast Asia Regional Stakeholders Forum on Cyber Sexual and Gender-Based Violence

DRF also participated in the ‘South and Southeast Asia Regional Stakeholders Forum on Cyber Sexual and Gender-Based Violence’, a conference held by Caught in the Web in Colombo, Sri Lanka. DRF’s Legal Manager, Irum Shujah, spoke in the “Understanding the Perspective and Prospects of the Legal Sector” session and provided a Pakistani perspective to the participants. The sessions focused on sharing the experiences, tools, lessons learned, and challenges of Civil Society Organizations (CSOs) addressing Cyber Sexual and Gender-Based Violence (CSGBV) and Online Gender-Based Harassment (OGHS) in South Asian countries, so that these insights can be disseminated and integrated within their respective countries and across the region.

DRF at Global Partnership for Action on Gender-Based Online Harassment and Abuse’s Conference in Kenya

DRF also participated in the Global Partnership's conference, ‘Preventing and disrupting the spread of gendered disinformation in the context of electoral processes and democratic rollback’, held in Nairobi, Kenya. DRF shared its perspective on gendered disinformation used to target vulnerable identities, including journalists; on the abuse of AI, which was heightened during the recent elections; and on what DRF is doing to counter such threats to safe online spaces and democratic processes.

Legal Clinic (Live on Instagram)

DRF conducted a live legal clinic on Instagram, creating an interactive space for public engagement. Both the Legal team and the Helpline team participated and addressed the queries shared by the audience through comments. The clinic primarily focused on the nature of the cases received by DRF's Helpline team and on how DRF’s Legal team subsequently processes these cases. The audience's questions ranged from how to handle evidence to the forms of online harassment faced by the public at large.

Digital Literacy sessions in schools

This month, DRF held Digital Citizens program sessions at four schools in Lahore. 735 students (female and male) and 44 teachers learned about ways of staying safe in online spaces and the reporting mechanisms available in case of harassment or bullying. The participants were given gift bags with online safety resources and stationery.

A Session with Inspiring Women Pakistan

DRF delivered a virtual session on 31st March 2024. The session focused on online harassment in the digital era, including its legal aspects, its common forms, and its impact on psychological and social life. There were a total of 30 participants in the session; 15 girls were from Pakistan, and 15 were from South America. The prevention of online harassment and digital security were also discussed during the session.

A workshop on Perpetrator Data and Evaluating OGBV Tools

DRF attended an online session organized by the World Wide Web Foundation, focusing on Perpetrator Data and Evaluating OGBV Tools. The workshop centered on assessing the effectiveness of the emerging research roadmap in real-world scenarios and gathering feedback for refinement.

International Conference on Transformative Potential of Generative Artificial Intelligence, Law and Legal Education

Minahil Farooq from DRF’s Legal team participated in a two-day International Conference on the Transformative Potential of Generative Artificial Intelligence, organized by the University of Central Punjab. The sessions offered valuable insights at the intersection of Artificial Intelligence and legal education. The conference's objective was to explore strategies for integrating AI into legal education, providing a comprehensive understanding of its transformative potential.

Webinar on Navigating Gender Rights in the Judiciary in the Era of Artificial Intelligence

On 14 March 2024, DRF attended a webinar on artificial intelligence in the judiciary titled “Breaking Barriers: Navigating Gender Rights in the Judiciary in the Era of Artificial Intelligence”, organized by National Law University Delhi and co-hosted with UNESCO and Lawyer's Hub. In light of the rapid development of artificial intelligence (AI), the webinar included a panel discussion with a retired senior judge, a policy analyst, and stakeholders from civil society. It began with a discussion of the scope of AI, its role, and its implications within judicial systems. The discussion then turned to the European Ethical Charter for AI and its five core principles: respect for fundamental rights; non-discrimination; quality and security; transparency, impartiality, and fairness; and user control. Panelists also discussed the inherent risks of AI-generated outcomes, particularly when algorithms are not adequately trained, which can lead to severe human rights violations. The recommendations outlined during the webinar stressed recognizing human biases in AI tools, understanding the purposes for which tools are developed, maintaining system testing and feedback mechanisms, and promoting awareness through training.

DRF Updates:

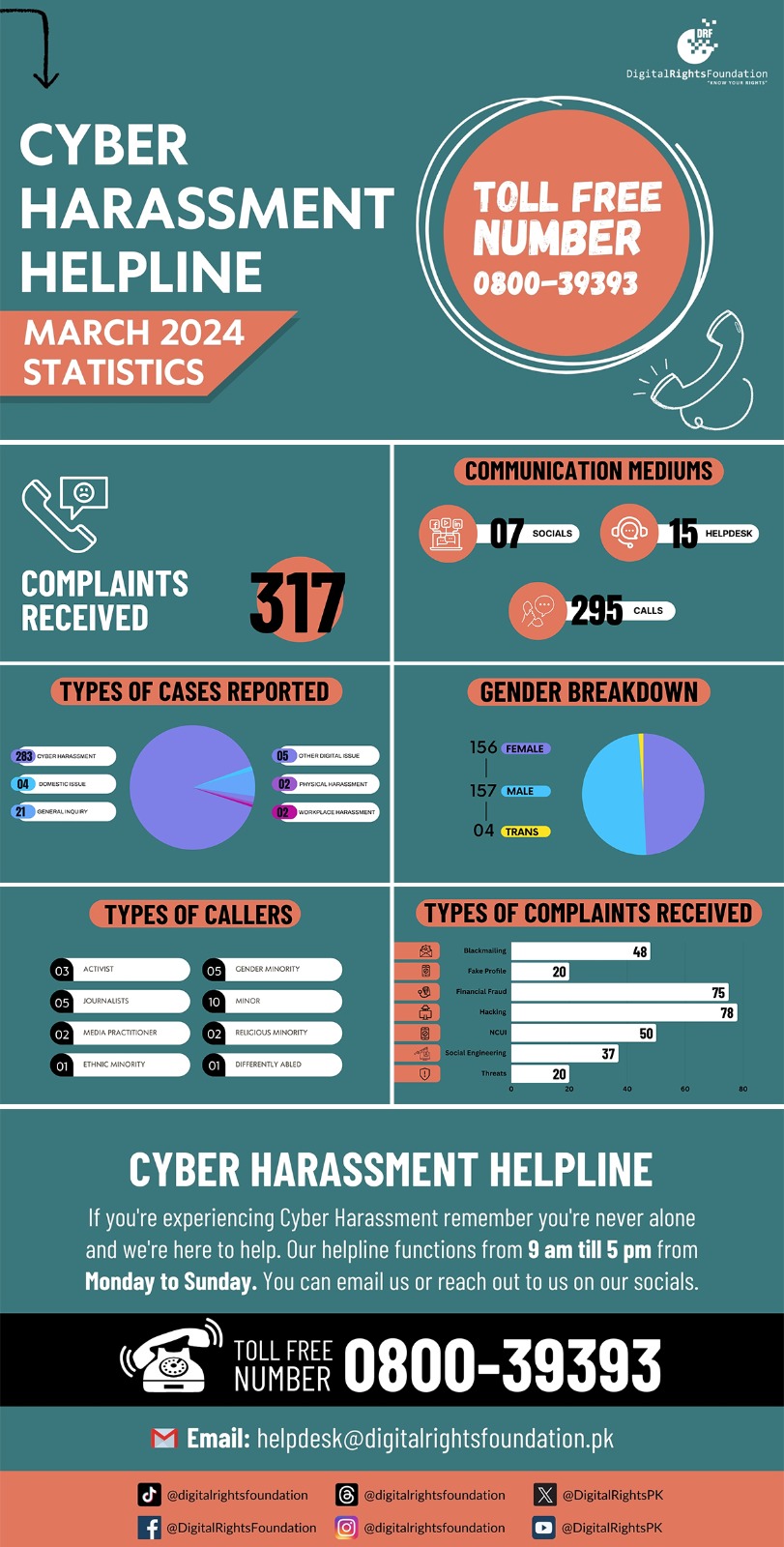

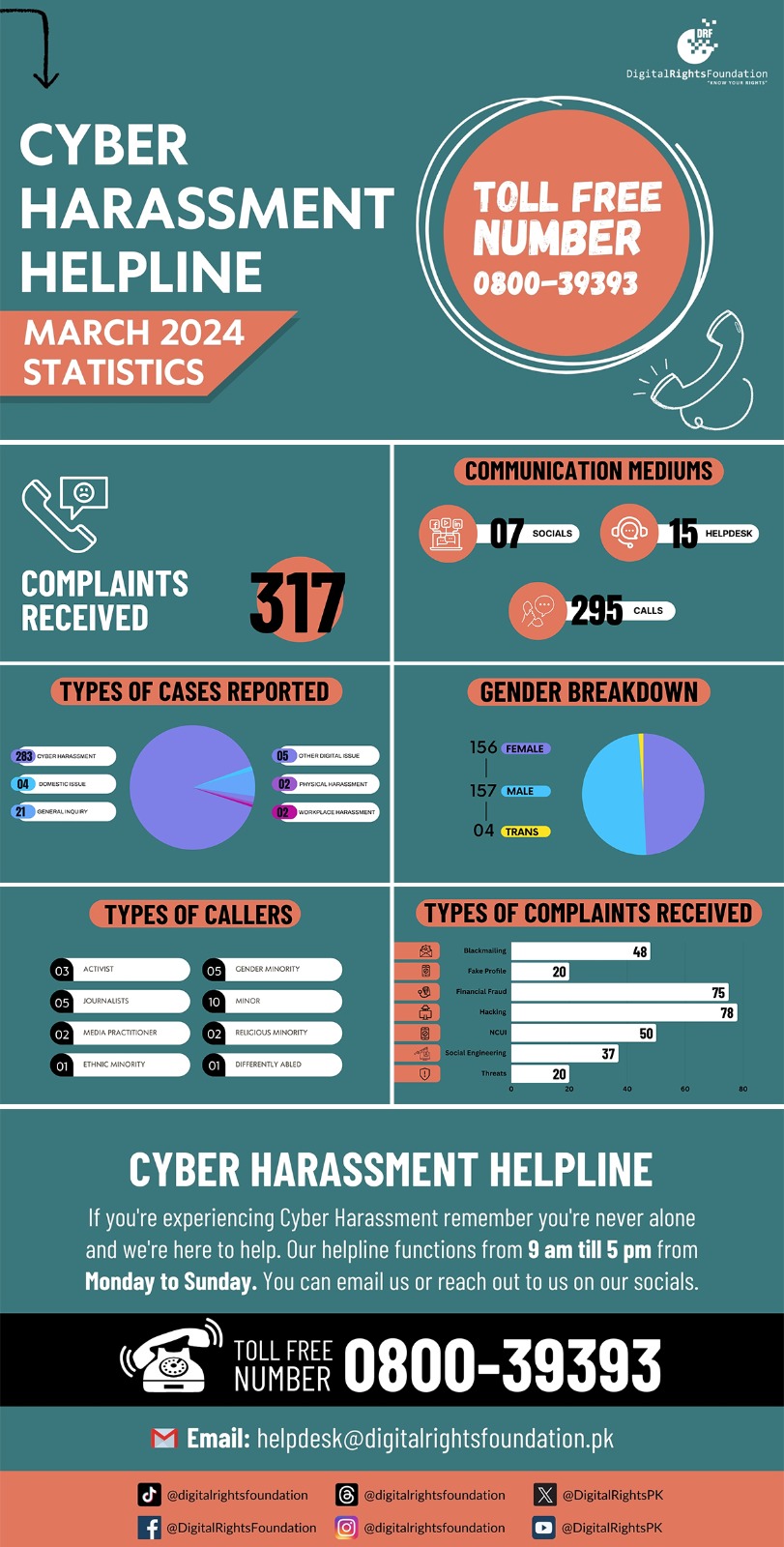

Cyber Harassment Helpline

The Cyber Harassment Helpline received 317 complaints in total in March 2024. Of these, 50 complaints were about NCUI, or Non-Consensual Use of Information, which may include images, names, phone numbers, etc. Our helpline prioritizes helping women and transgender individuals.

If you’re encountering a problem online, you can reach out to our helpline at 0800-39393, email us at [email protected] or reach out to us on our social media accounts. We’re available for assistance from 9 am to 5 pm, Monday to Sunday.

IWF Portal

DRF, in collaboration with the Internet Watch Foundation (IWF) and the Global Fund to End Violence Against Children, launched a portal to improve children’s online safety in Pakistan. The new portal allows internet users in Pakistan to anonymously report child sexual abuse material in three languages: English, Urdu, and Pashto.

www.report.iwf.org.uk/pk

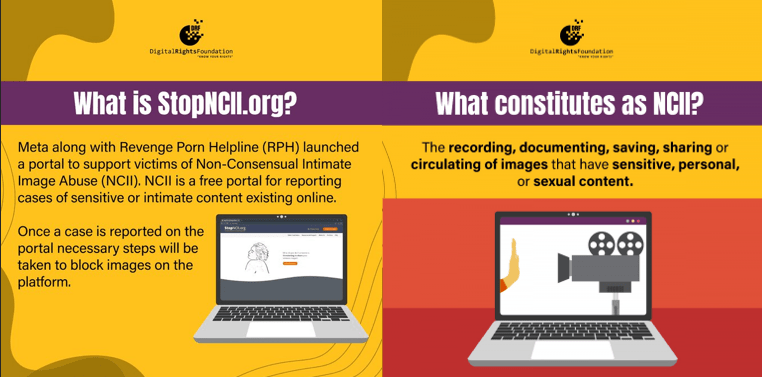

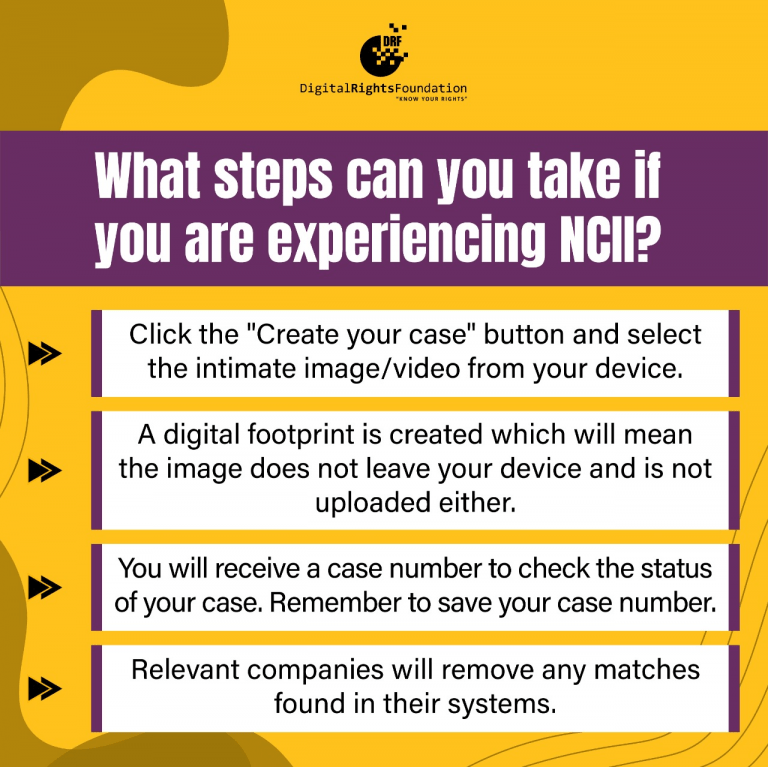

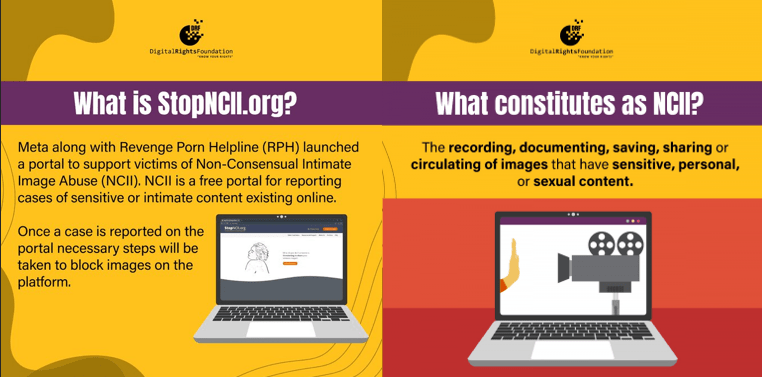

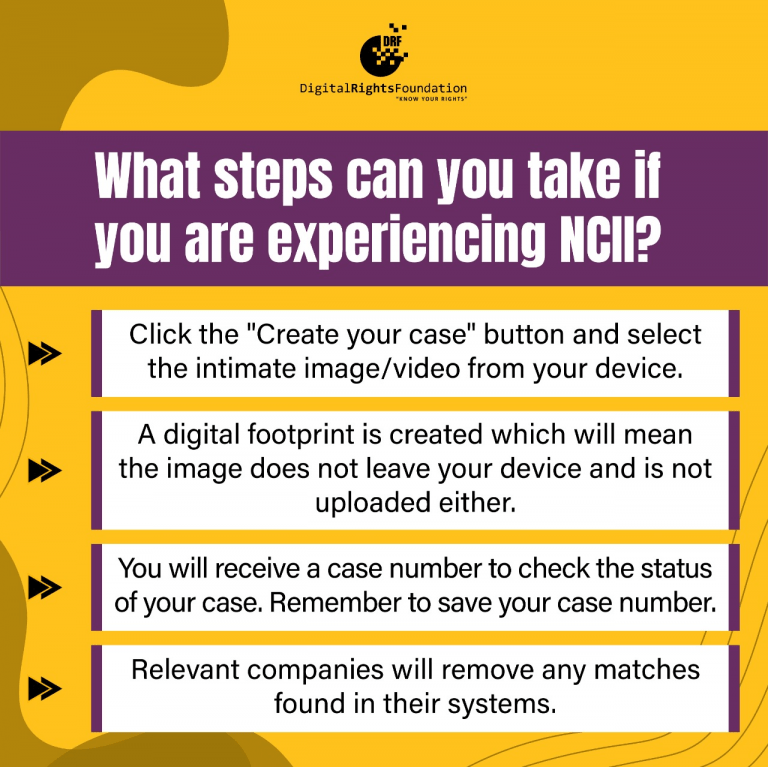

StopNCII.org

Meta, along with the Revenge Porn Helpline (RPH), has launched a portal to support victims of Non-Consensual Intimate Image Abuse (NCII). StopNCII.org is a free portal for reporting cases of sensitive or sexual content existing online. Once you report a case, the necessary steps will be taken to block the images from the platform.

https://stopncii.org/