Soon after the Citizens Protection (Against Online Harm) Rules, 2020 (the ‘Rules’) were notified in January 2020, Digital Rights Foundation (DRF) issued a statement expressing its concerns about the Rules. We submitted then, and reiterate now, that the Rules restrict free speech and threaten the privacy of Pakistani citizens. Subsequently, we prepared a Legal Analysis highlighting the jurisdictional and substantive issues with the Rules in light of constitutional principles and precedent, as well as larger policy questions.

When the Ministry of Information Technology & Telecommunication (MoITT) announced the formation of a committee to consult on the Rules, DRF, along with other advocacy groups, decided to boycott the consultation unless and until the Rules were de-notified. In a statement signed by over 100 organisations and individuals in Pakistan and released on Feb 29, 2020, the consultation process was termed a ‘smokescreen’. We maintain that position, and through these Comments we wish to explain why we endorsed (and continue to endorse) this stance, what we believe is wrong with the consultation, and how these wrongs can be fixed. We then set out our objections to the Rules themselves.

Comments on the Consultations:

At the outset, we at DRF would like to affirm our commitment to striving for a free and safe internet for all, especially women and minorities. We understand that, with a fast-growing digital economy, rapidly expanding social media use and a continuous increase in the number of internet users in Pakistan, ensuring a safe online environment is of considerable interest. While online spaces should be made safe, internet regulation should not come at the expense of the fundamental rights guaranteed by the Constitution of Pakistan, 1973 and Pakistan’s international human rights commitments. A balance, therefore, has to be struck between fundamental rights and the limitations imposed on their exercise. The only way, we believe, to achieve this balance is through meaningful consultation, conducted in good faith, with stakeholders and civil society.

The drafting process of the Rules, however, has been exclusionary and secretive from the start. There was no public engagement at all before the Rules were notified in January 2020; indeed, the Rules only became public knowledge after their notification. Given the serious and wide-ranging implications of the Rules, caution on the Government’s part and sustained collaboration with civil society were needed. Instead, the sudden and unexpected notification of the Rules alarmed nearly all stakeholders, including industry actors, who issued a sharp rebuke of the Rules. It is respectfully submitted that such practices are inconsistent with the principles of ‘good faith.’

Almost immediately after the notification, the Rules drew sharp criticism locally and internationally. As a result, MoITT announced the formation of a committee to begin consultation on the Citizens Protection (Against Online Harm) Rules, 2020. However, a consultation at the tail end of the drafting process will do little good. Public participation before and during the official law-making process is far more significant than any end-phase activity, which risks being an eyewash rather than a meaningful process.

We are concerned not only with the content of the regulations but also with how that content is to be agreed upon. The consultations, which are a reaction to the public outcry, fail to address either concern. Experience shows that when people perceive a consultation to be insincere, they lose trust not only in the process but in the resulting regulations as well. Therefore, we urge the Federal Government to withdraw the existing Rules (a mere suspension of their implementation is insufficient) through the same process by which they were notified. Any future Rules need to be co-created with the meaningful participation of civil society from the start. Without a withdrawal of the Rules, the ‘reactionary’ consultations would be seen as a manipulation of the process to deflect criticism rather than a genuine exercise to seek input, and the Rules are unlikely to gain public acceptance or legitimacy. Once the necessary steps to withdraw the notification have been taken, we request the government to issue an official statement clarifying that the Rules have no legal status beyond that of a ‘proposed draft.’ This would mean that anything and everything in the Rules is open for discussion. It would not only demonstrate ‘good faith’ on the government’s part but also show its respect for freedom and democracy.

Even otherwise, it should be noted that the present consultation falls short of its stated purpose insofar as it seeks input on the Rules alone. The Preamble of the ‘Consultation Framework,’ posted on PTA’s website, lays down the purpose of the consultation as follows: “in order to protect the citizens of Pakistan from the adverse effects of online unlawful content…” It is submitted that making the internet ‘safe’ and ‘protecting’ citizens would require more than regulation alone. The government should commission a series of studies to identify other effective methods of tackling online harms. Self-regulatory mechanisms for social media companies, educating users about internet safety and protective tools, and capacity-building of law-enforcement agencies to deal with cyber-crimes are some of the options that must be explored if the objective is to protect citizens from online unlawful content. These steps become all the more significant because online threats and harmful content continue to evolve. Additionally, such measures would reduce the burden on regulators and provide a low-cost remedy to users. It is reiterated that effectively addressing this daunting task requires a joint effort between the Government, civil society, law enforcement agencies and all social media companies.

To that end, a participatory, transparent and inclusive consultative process is needed. Such a process will help secure greater legitimacy and support for the Rules and, at the same time, can transform the relationship between citizens and their government. First and foremost, the presence of all stakeholders should be ensured. The Government should, in particular, identify the groups and communities most at risk and secure their representation in the consultation(s). If transparency and inclusiveness require all key stakeholders to be at the table, consensus demands that all voices be heard and considered. Therefore, we request that mechanisms be put in place to ensure that the views and concerns of stakeholders are seriously considered and that compromises are not simply determined by majority positions.

Given the wide-ranging and serious implications of the Rules, it is necessary that citizens’ groups and society at large be kept informed at all stages of drafting these regulations. The drafters should also explain to the people why they have produced the text they have: why it was needed, what factors they considered, what compromises they struck with the negotiators and why, and how they reached agreement on the final text.

Finally, input from civil society and stakeholders should be sought to define the words and phrases in the Rules that create penal offences. Many of the terms in the Rules (discussed below) are either loosely defined or lack clarity. It is suggested that discussions be held to determine clear meanings for such terms.

Objections to the Rules:

1: The Rules Exceed the Scope of Their Parent Acts:

The Rules have been notified under provisions of the Pakistan Telecommunication (Re-organisation) Act, 1996 (PTRA) and the Prevention of Electronic Crimes Act (PECA) 2016 (hereinafter collectively referred to as the ‘Parent Acts’). The feedback form on the PTA website notes that the Rules are formulated “exercising statutory powers under PECA section 37 sub-section 2.” Under the Rules, the Pakistan Telecommunication Authority (PTA) is the designated Authority.

It is submitted that the scope and scale of action defined in the Rules go beyond the mandate given under the Parent Acts. The government is reminded that rules cannot impose or create new restrictions that are beyond the scope of the Parent Act(s).

It is observed that Rule 3 establishes the office of a National Coordinator and consolidates in it (through delegation) the powers granted to the Authority under PECA and PTRA to regulate online content. While we understand that Section 9 of PTRA allows delegation of powers, no concept of delegation of powers exists under PECA. Therefore, passing on the powers contained in PECA to any third body is a violation of the said Act. Even under PTRA, powers can only be delegated to the chairman/chairperson, a member or any other officer of PTA (re: Section 9), in which case the autonomy and independence of the National Coordinator would remain questionable.

Without conceding that powers under PECA can be delegated to the National Coordinator, it is still submitted that Rule 7 goes beyond the scope of PECA (and also violates Article 19 of the Constitution, as discussed later). Section 37(1) of PECA grants power to the Authority to “remove or block or issue directions for removal or blocking of access to an information through any information system....” While Section 37(1) confers a limited power only to remove or block access to information, the power to remove or block an entire information system or social media platform (conferred upon the National Coordinator by virtue of Rule 7) is a clear case of excessive delegation.

Further, the Rules require social media companies to deploy proactive mechanisms to prevent the live streaming of fake news (Rule 4) and to remove, suspend or disable accounts or online content that spread fake news (Rule 5). Rule 5(f) further provides that a social media company shall, “if communicated by the Authority that online content is false, put a note to that effect along with the online content”. It is submitted that the Parent Acts do not grant the power to legislate on topics such as fake news and misinformation. It is also unclear from where the wide powers granted to the National Coordinator under Rule 3(2), to advise the Provincial and Federal Governments, issue directions to departments, authorities and agencies and summon official representatives of social media companies, are derived. The aforementioned Rules are, therefore, ultra vires the Parent Acts.

Recommendations:

- Remove the office of the National Coordinator and the powers it wrongly assumes through PECA (re: the power to block online systems under Rule 7).

- If any such power is to be conferred, then limit that power to removal or suspension of an information on any information system as opposed to the power to block the entire online system.

- Establish criteria for the selection and accountability of the National Coordinator.

- Ensure the autonomy and independence of the National Coordinator.

- Introduce mechanisms, such as a public database or directory, to ensure that any authority tasked with regulating and removing content is transparent about the content removed and the reasons for its removal.

- Omit provisions regulating ‘fake news’ as they go beyond the scope of the Parent Acts.

- Omit Rule 5(f), i.e. the obligation to issue fake news corrections, as it goes beyond the scope of the Parent Acts. Alternatively, social media companies should be urged to attach ‘fact checks’ to false information.

- Exclude from the powers of the National Coordinator the ability to advise the Provincial and Federal Governments, issue directions to departments, authorities and agencies and to summon official representatives of social media companies, contained in Rule 3(2), as they go beyond the scope of the Parent Acts.

2: Arbitrary Powers:

It is observed that the Rules have granted arbitrary and discretionary powers to the National Coordinator and, at the same time, have failed to provide any mechanisms against the misuse of these powers.

Rule 4 obligates a social media company to remove, suspend or disable access to any online content within twenty-four hours, and in emergency situations within six hours, after being intimated by the Authority that any particular online content is in contravention of any provision of the Act, or any other law, rule, regulation or instruction of the National Coordinator. What constitutes an ‘emergency situation’ is also to be determined exclusively by this office. On the interpretation or permissibility of any online content, as per Rule 4(2), the opinion of the National Coordinator is to take precedence over any community standards, rules or guidelines devised by the social media company.

It is submitted that this grants unprecedented censorship powers to a newly appointed National Coordinator, who has sole discretion to determine what constitutes ‘objectionable’ content. These powers are extremely vague and arbitrary, and the Rules fail to provide any checks and balances to ensure that removal requests will be made in a just manner. It is trite law that a restriction on freedom of speech will be unreasonable if the law imposing the restriction does not provide safeguards against the arbitrary exercise of power. Rule 4, however, far from attempting to provide safeguards against abuse of power, invites arbitrary action and bestows upon the National Coordinator unfettered discretion to regulate online content. Moreover, the power granted to the National Coordinator under Rule 5 to monitor the falsehood of online content adds to these unfettered powers. It is concerning that while the National Coordinator has been granted extensive powers, including quasi-judicial and legislative powers to determine what constitutes online harm, the qualifications, accountability and selection procedure of the National Coordinator remain unclear. This will have a chilling effect, as social media companies will rush content moderation decisions to comply with the restrictive time limits, including in particularly complicated free speech cases that require deliberation and legal opinion. Furthermore, smaller social media companies, which lack the resources and automated moderation capacity of big tech companies such as Facebook or Google, will be disproportionately burdened by urgent content removal instructions.

Further, Rule 6 requires a social media company to provide the Investigation Agency with any information, data, content or sub-content contained in any information system owned, managed or run by that social media company. It is unclear whether the Investigation Agency is required to follow any legal or judicial procedure to make such a request, and whether it is required to notify or report to a court upon seizing any such information. Under PECA as it currently stands, there is a legal process through which information or data of private users can be requested. This Rule, however, bypasses that process entirely and gives the National Coordinator sweeping powers to monitor online traffic. The power under Rule 6 exceeds the ambit of Section 37 and runs parallel to the data request procedures already established with social media companies.

Recommendations:

- Reconsider the 24-hour time limit for content removal. It would be unreasonable to impose such a strict timeline, especially for content relating to private wrongs/disputes such as defamation and for complicated free speech cases.

- Insert a “Stop the Clock” provision by listing out a set of criteria (such as seeking clarifications, technical infeasibility, etc.) under which the time limit would cease to apply to allow for due process and fair play in enforcing such requests.

- Formulate clear and predetermined rules and procedures for investigations, seizures, collection and sharing of data.

- Rule 4 should be amended and the Authority tasked with removal requests be required to give ‘cogent reasons for removal’ along with every content removal request. If those reasons are not satisfactory, the social media company should have the right to seek further clarifications.

- National Coordinator should not be the sole authority to determine what constitutes ‘objectionable’ online content; neither can this be left open for the National Coordinator to decide from time to time through its ‘instructions’.

- Remove the powers to request, obtain and provide data to the Investigation Agency.

3: Vague Definitions:

It is an established law that “the language of the statute, and, in particular, a statute creating an offence, must be precise, definite and sufficiently objective so as to guard against an arbitrary and capricious action on part of the state functionaries.” Precise definitions are also important so that social media companies may regulate their conduct accordingly.

A fundamental flaw in these Rules is their vague, overly broad and extremely subjective definitions. For example, extremism (Rule 2(d)) is defined as “violent, vocal or active opposition to fundamental values of the state of Pakistan including...” The Rules do not, however, define what constitutes, or may be referred to as, the fundamental values of the state of Pakistan. Given the massive volume of content shared online, platforms may feel obliged to take a ‘better safe than sorry’ approach, which in this case would mean ‘take down first, ask questions later (or never).’ This threatens not only to impede the legitimate operation of (and innovation in) services, but also to incentivize the removal of legitimate content. Moreover, honest criticism or fair comment regarding the Federal Government, or any other state institution, runs the risk of being seen as ‘opposition,’ as this word also lacks clarity.

Similarly, while social media companies are required to ‘take due cognizance of the religious, cultural, ethnic and national security sensitivities of Pakistan’ (Rule 4(3)), the Rules fail to elaborate on these terms. Further, ‘fake news’ (Rule 4(4) & Rule 5(e)) has not been defined which adds to the ambiguity of the Rules. It is submitted that vague laws weaken the rule of law because they enable selective prosecution and interpretation, and arbitrary decision-making.

Rule 4(4) obligates a social media company to deploy proactive mechanisms to prevent the live streaming of any content relating to, among other things, ‘hate speech’ and ‘defamation.’ It should be noted that ‘hate speech’ and ‘defamation’ are both defined as offences under PECA as well as the Pakistan Penal Code, 1860 (‘PPC’). It is also posited that determining either of these offences requires a thorough investigation and a trial under these laws. If an investigation and trial are necessary to establish these offences, it would be nearly impossible for social media companies to ‘proactively’ prevent their live streaming. Additionally, social media companies already take down such material based on their community guidelines, which cover areas such as public safety, hate speech and terrorist content. For instance, during the Christchurch terrorist attack, while Facebook was unable to take down the livestream as it was happening, AI technology and community guidelines were used to remove all instances of the video from the platform within hours of the incident. The Rules, however, impose an unnecessary burden on social media companies; and if any content is hastily removed as hateful or defamatory without a proper determination or investigation, such removal would not only implicate the person who produced or transmitted the content (given that these are penal offences under PECA and the PPC) but also condemn them unheard. Even otherwise, hate speech and defamation are entirely contextual determinations, where the illegality of material depends on its impact. Impact on viewers is impossible for an automated system to assess, particularly before or while the material is being shared.

It is also noted that Rule 4(4) is in conflict with Section 38(5) of PECA, which expressly rejects imposition of any obligation on intermediaries or service providers to proactively monitor or filter material or content hosted, transmitted or made available on their platforms.

Recommendations:

- It is suggested that discussions be held amongst all stakeholders to determine clear and precise meanings of the following terms:

- Extremism

- Fundamental Values of the State of Pakistan

- Religious, cultural and ethnic sensitivities of Pakistan

- National Security

- Fake News

- Use alternative methods of countering hateful and extremist speech, such as investment in independent fact-checking bodies and funding for organisations that develop counter-speech and tackle online speech targeting women, gender minorities, and religious and ethnic minorities.

- Formulate clear components of ‘active or vocal opposition’ to ensure it cannot be used to silence dissenting opinions.

- Omit Rule 4(4) as it violates Section 38(5) of PECA.

- Content constituting ‘hate speech’ and ‘defamation’ should not be removed without a proper investigation.

4: Violates and Unreasonably Restricts Fundamental Rights:

The Rules, as they stand, pose a serious danger to fundamental rights in the country. In particular, the breadth of the Rules’ restrictions, and the intrusive requirements they place on social media platforms, would severely threaten online freedom of expression and the rights to privacy and information.

It is submitted that Rule 4 is a blatant violation of Article 19 (freedom of speech, etc.) of the Constitution. It exceeds the boundaries of permissible restrictions within the meaning of Article 19, lacks the necessary attributes of reasonableness and is extremely vague. Article 19 states that restrictions on freedom of expression must be “reasonable” under the circumstances, and must be in aid of one of the legitimate state interests stated therein (“in the interests of the glory of Islam, integrity, security, or defence of Pakistan…”). The Rules, however, require all social media companies to remove or block online content if it is, among other things, in “contravention of instructions of the National Coordinator.” It is to be noted that deletion of data on the instructions of the National Coordinator does not fall under the permissible restrictions of Article 19, as it is an arbitrary criterion for restricting fundamental rights. Furthermore, a restriction on freedom of speech may only be placed in accordance with ‘law’, and an instruction passed by the National Coordinator does not qualify as law within the meaning of Article 19.

It must also be noted that Rule 7 (Blocking of Online System) violates Article 19 of the Constitution, which only provides the power to impose reasonable ‘restrictions’ on free speech in accordance with law. It is submitted that in today’s digital world, online systems allow individuals to obtain information and to form, express and exchange ideas; they are the mediums through which people exercise their speech. Hence, entirely blocking an online system would be tantamount to blocking speech itself. The power to ‘block’ cannot be read under, inferred from, or assumed to be part of the power to ‘restrict’ free speech. It was held in the Civil Aviation Authority case that “the predominant meanings of the said words (restrict and restriction) do not admit total prohibition. They connote the imposition of limitations of the bounds within which one can act...” Therefore, while Article 19 allows the imposition of ‘restrictions’ on free speech, the power to ‘block’ an information system entirely exceeds the boundaries of permissible limitations under it and is a disproportionate means of removing harmful content from the internet. This renders Rule 7 inconsistent with the Constitution as well (it was discussed earlier that Rule 7 also goes beyond the scope of Section 37(1) of PECA).

As discussed above, a restriction on freedom of speech will be unreasonable if the law imposing the restriction does not provide safeguards against the arbitrary exercise of power. Rule 4 violates this principle by inviting arbitrary action and bestowing upon the National Coordinator unfettered discretion to regulate online content without any safeguards against abuse of power. The Rules do not provide sufficient safeguards to ensure that the power extended to the National Coordinator will be exercised in a fair, just and transparent manner. The power to declare any online content ‘harmful’, and to search and seize data without any means of challenging the authority, threatens the privacy and free speech of both the companies and the people.

The fact that the government has asked social media companies to provide any and all user information or data in a ‘decrypted, readable and comprehensible format’, including private data shared through messaging applications like WhatsApp (Rule 6), without defining any mechanism for accessing the data of persons under investigation, shows that it is concerned neither with due process of law nor with potential violations of citizens’ right to privacy.

Finally, Rule 5 obligates social media companies to put a note alongside any online content that is considered or interpreted to be ‘false’ by the National Coordinator. Not only does this provision add to the unfettered powers of the National Coordinator, which may be exercised arbitrarily, but it also puts the Coordinator in charge of policing the truth. This violates the freedom to form an ‘opinion’ (a right read into Article 19), as the National Coordinator would decide, or dictate, what is true and what is false.

Recommendations:

- Amend Rule 4 and exclude from it the words “the instructions of the National Coordinator” as the same violates Article 19 of the Constitution.

- Omit Rule 7 as it violates Article 19 and does not fall under the ‘reasonable restrictions’ allowed under the Constitution.

- Formulate rules and procedures for investigations, seizures and collection of data which are in line with due process safeguards.

- Rule 4 should be amended to require the regulatory body to give ‘cogent reasons for removal’ along with every content removal request. If those reasons are not satisfactory, the social media company should have the right to seek further clarifications.

- The authority tasked with content removal should not be the sole authority to determine what constitutes ‘objectionable’ online content; neither should it be left open for the authority to decide from time to time through its ‘instructions’.

5: Data Localisation:

Rule 5 obligates social media companies to register with the PTA within three months of these Rules coming into force. It also requires a social media company to establish a permanent registered office in Pakistan with a physical address located in Islamabad and to appoint a focal person based in Pakistan for coordination with the National Coordinator.

It is submitted that requiring companies to register with PTA and establish a permanent registered office in Pakistan before they can be permitted to be viewed and/or provide services in Pakistan is a move towards “data localisation” and challenges the borderless nature of the internet, a feature that is intrinsic to the internet itself. Forcing businesses to create a local presence is outside the norms of global business practice and could force international social media companies to exit the country rather than invest further in Pakistan. It is unreasonable to expect social media companies to set up infrastructure in the country when the nature of the internet allows services to be easily administered remotely. Given the increased compliance costs that come with incorporating a company in Pakistan, companies across the globe, including start-ups, may have to reconsider serving users in Pakistan. Consequently, users in Pakistan, including the local private sector, may lose access to a variety of services required for day-to-day communication, online transactions, and trade/business-related tasks. Many businesses and organisations across Pakistan rely on the services provided by social media companies, particularly during the Covid-19 pandemic when reliance on the internet has increased substantially, so the Rules will also have an indirect impact on the economy. The proposed requirements of local incorporation and physical offices will also have significant repercussions for taxation, foreign direct investment and other legal matters, besides negatively impacting economic growth.

To effectively defend against cybercrimes and threats, companies protect user data and other critical information via a very small network of highly secure regional and global data centers staffed with uniquely skilled experts who are in scarce supply globally. These centers are equipped with advanced IT infrastructure that provides reliable and secure round-the-clock service. The clustering of highly-qualified staff and advanced equipment is a critical factor in the ability of institutions to safeguard data from increasingly sophisticated cyber-attacks.

Mandating the creation of a local data center will harm cybersecurity in Pakistan by:

- Creating additional entry points into IT systems for cyber criminals.

- Reducing the quality of cybersecurity in all facilities around the world by spreading cybersecurity resources (both people and systems) too thin.

- Forcing companies to disconnect systems and/or reduce services.

- Fragmenting the internet and impeding global coordination of cyber defense activities, which can only be achieved efficiently and at scale when and where the free flow of data is guaranteed.

Preventing the free flow of data:

- Creates artificial barriers to information-sharing and hinders global communication;

- Makes connectivity less affordable for people and businesses at a time when reducing connectivity costs is essential to expanding economic opportunity in Pakistan, boosting the digital economy and creating additional wealth;

- Undermines the viability and dependability of cloud-based services in a range of business sectors that are essential for a modern digital economy; and

- Slows GDP growth, stifles innovation, and lowers the quality of services available to domestic consumers and businesses.

The global nature of the Internet has democratized information, making it available to anyone, anywhere in the world, in an infinite variety of forms. The economies of scale achieved through globally located infrastructure have contributed to the affordability of services on the Internet, where several prominent services are available for free. Companies are able to provide these services to users even in markets that may not be financially sustainable because they do not have to incur the additional cost of setting up and running local offices and legal entities in each country where they offer services. Therefore, these Rules will harm the consumer experience on the open internet, increasing costs to the point where offering services/technologies to consumers in Pakistan becomes financially unviable.

Recommendations:

- Scrap Rule 5 and abandon the model of data localisation as it discourages business and weakens data security of servers;

- Develop transparent and legally-compliant data request and content removal mechanisms with social media companies as an alternative to the model proposed in Rule 5.

Concluding Remarks:

We have discussed that the current consultations lack the essentials of ‘good faith’, which demands a reexamination of the entire framework. We have also discussed that the Rules exceed the scope of the Parent Acts, accord arbitrary powers to the National Coordinator, use vague definitions and unreasonably restrict fundamental rights, which makes them liable to be struck down. In light of the above, we call upon the government to immediately withdraw the Rules and restart the consultation process from scratch. The renewed consultation should centre on tackling ‘online harm’ rather than on the Rules alone, and consensus should be reached on the best ways to tackle online harms. This would require comprehensive planning, transparent and meaningful consultations with stakeholders and the participation of civil society. Until this is done, Digital Rights Foundation will disassociate itself from any government initiative that is seen as an insincere effort to deflect criticism.

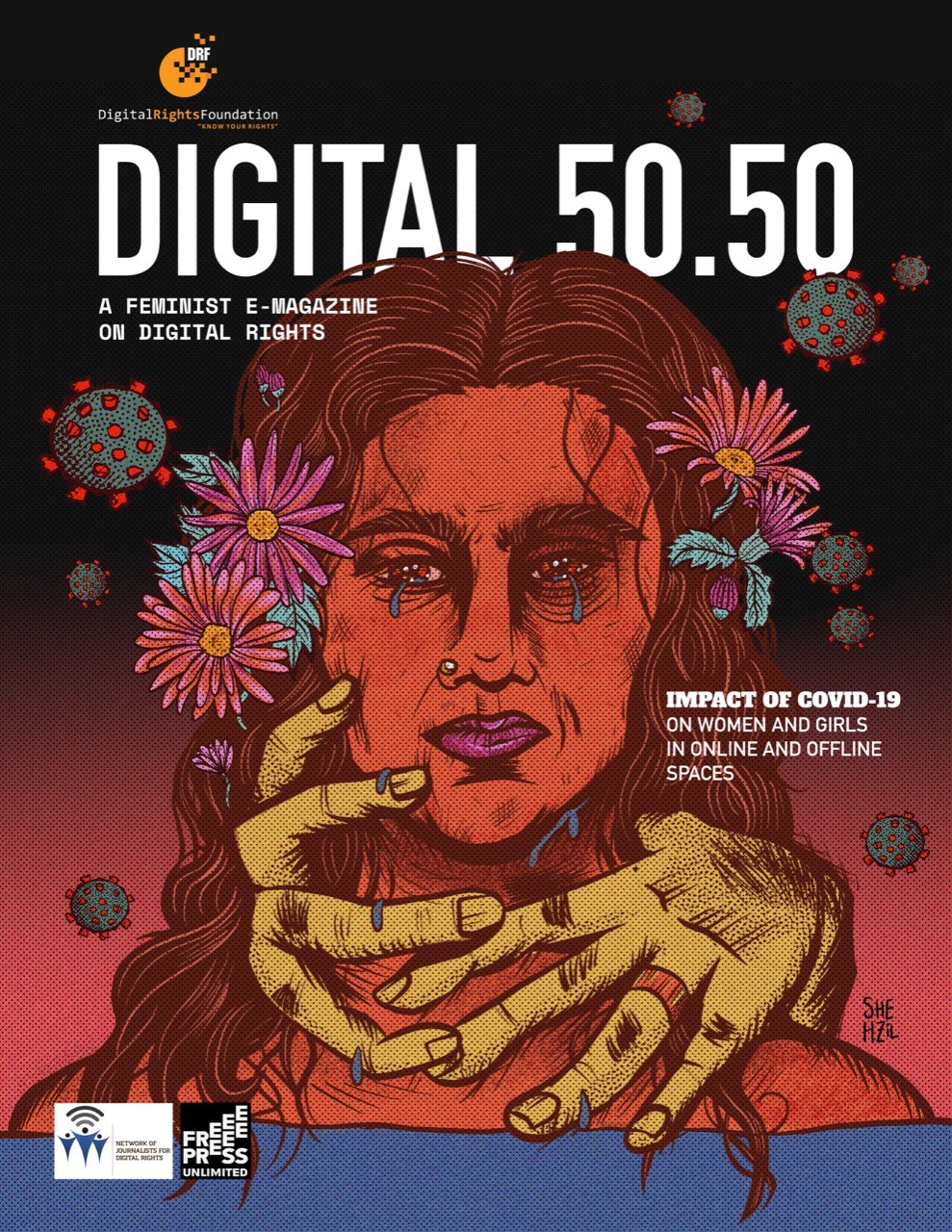

In collaboration with Free Press Unlimited, Digital Rights Foundation participated in a campaign highlighting the work done by DRF and its Network of Women Journalists for Digital Rights to bring accurate and reliable information to the public amid the coronavirus pandemic. The campaign included a series of videos from DRF’s team on our role in bringing reliable information on digital rights to our beneficiaries and the general public, and the launch of a toolkit for journalists and content creators on how to keep themselves safe given the new risks and threats emerging in online spaces. It also brought to the public a series of visual stories from the journalistic frontline of Covid-19.
